To my Angel.

Preface

In 1989, I went to college to study business informatics, or applied computer science. One of the classes I took was Software Engineering. It taught us the process of how to develop software.

In my first job out of college, I ran into problems applying this process. Subject-matter experts didn’t agree on the requirements. And when they did agree, they changed their minds, even after implementation was complete. During implementation, we’d find issues with the design. Budget and schedule overruns of 200% were common.

In my youthful ignorance, I blamed fickle customers. My second job was working for a product company, where I didn’t interact directly with customers anymore. That didn’t solve my problems, though. We had fixed scope and deadlines, resulting in poor quality and lots of time spent fixing bugs.

In my third job, I read [Beck2000] and started to see a way out of the misery. I introduced eXtreme Programming into the organization and achieved some initial successes. At the first bump in the road, however, the CEO forbade us to do any more pair programming.

And so it continued with every job I had after that. I’ve never had the feeling that we as an industry have figured out how to do software development well. It has never felt like engineering to me.

This book is my attempt to survey the field and see how far away that ideal is.

The material that follows is in written form, because I firmly believe that writing is thinking. It’s a book rather than a blog post or series of posts because there is a lot of ground to cover. Writing this book is the most ambitious thing I’ve ever done. I may not even finish it, but I’m sure I’ll learn a lot on the way. And maybe it’ll help you too.

Ray Sinnema

November 2023

Introduction

This part of the book builds the foundations for the rest of the book. We start by introducing engineering and software. We then put them together to get a sense of what software engineering should look like. We conclude with a word on the organization of the book and the origin of its title.

Engineering

If the goal is for software development to be an engineering discipline, then we should understand what engineering means.

Engineering is the creation of cost-effective solutions to practical problems by applying scientific knowledge to build things in the service of mankind.

— [Shaw1990]

One of the essential parts of this definition is that engineering is about building things. Where science is about discovering how things are, engineering is about discovering a form for an artifact that ensures it serves its purpose [Brockman2009].

Another essential part is applying science. If the required science isn’t available yet, we can settle for codified knowledge instead. If there are no generally accepted principles and procedures at all, however, then it’s craft rather than engineering [Shaw1990].

Science

Science is a system of knowledge covering general truths or the operation of general laws, especially as obtained and tested through the scientific method.

The scientific method is the set of principles and procedures for the systematic pursuit of knowledge, involving the recognition and formulation of a problem, the collection of data through observation and experiment, and the formulation and testing of hypotheses.

The scientific method involves making observations, formulating hypotheses based on those observations, running experiments to falsify the hypotheses, and generalizing hypotheses into a cohesive theory. These activities form a loop:

```mermaid
flowchart LR
    Hypothesis --Design an\nexperiment to\ntest the hypothesis--> Experiment
    Experiment --Perform the\nexperiment--> Observation
    Observation --Create or modify\nthe theory--> Theory
    Theory --Use the theory\nto form a\nhypothesis--> Hypothesis
```

Scientific knowledge grows over time. Little discoveries here and there build on each other to reach truly impressive gains in understanding. Most of this progress is gradual. Every once in a while, however, a breakthrough leads to rapid progress. Some of these breakthroughs are the result of a different way of looking at things [Kuhn1970].

Applying science

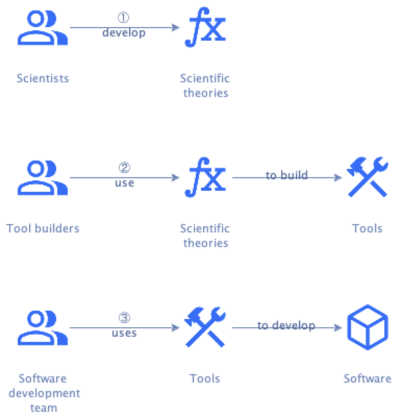

Application of the scientific method leads to scientific theories that engineering then applies to solve practical problems. For instance, electrical engineering applies the theory of electromagnetism [Jackson1999].

The methodical and iterative transition between scientific theory and its practical application successively develops and refines both the theory itself and its application. This forms the essence of good engineering practice [Voland2004].

Engineers must acquire broad and deep technical knowledge, which begins with an understanding of scientific principles. State-of-the-art designs require a deep understanding of one or more specialized engineering domains. A broad view of how such an area of expertise relates to other domains provides opportunities to apply knowledge in novel ways. Engineers must also be familiar with historical design failures to avoid repeating the mistakes of the past.

Engineers apply scientific theories by constructing models of their designs [Brockman2009]. A model is an approximation of a real system that responds in a similar way.

Since everything connects to everything, networks are important models. A graph is a visualization of a network, where the nodes are things and the edges are relationships between the things. Edges are either directed (with arrows) or undirected (without).

Here’s an example of an undirected graph:

```mermaid
graph LR
    a((a)) --- b((b))
    b --- c((c))
    c --- d((d))
    c --- e((e))
    c --- f((f))
    e --- f
    f --- g((g))
    b --- h((h))
    h --- i((i))
    c --- i
    i --- j((j))
```
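In code, an undirected graph is commonly stored as an adjacency list. This is a map from each node to the set of its neighbors. The following minimal Python sketch encodes the example graph above. The names `EDGES` and `build_adjacency` are illustrative and don't come from any library.

```python
from collections import defaultdict

# Edges of the example graph above; each pair is one undirected edge.
EDGES = [
    ("a", "b"), ("b", "c"), ("c", "d"), ("c", "e"), ("c", "f"), ("e", "f"),
    ("f", "g"), ("b", "h"), ("h", "i"), ("c", "i"), ("i", "j"),
]

def build_adjacency(edges):
    """Map each node to the set of nodes it shares an edge with."""
    adjacency = defaultdict(set)
    for u, v in edges:
        # An undirected edge is a relationship in both directions.
        adjacency[u].add(v)
        adjacency[v].add(u)
    return dict(adjacency)

graph = build_adjacency(EDGES)
print(sorted(graph["c"]))  # ['b', 'd', 'e', 'f', 'i']
```

For a directed graph, you would add only `v` to `adjacency[u]`.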

A concept map is a graph where the nodes represent concepts and the edges the relationships between them. Concept maps are useful for organizing and structuring knowledge.

A system is part of a network inside a boundary [Brockman2009]. Everything outside the boundary is the environment. Systems can consist of subsystems, which are also systems. Systems are often more than the sum of their parts.

Systems are important for engineering because:

- They’re more robust: fewer dependencies mean fewer things that can go wrong.

- They’re easier to reason about: instead of having to understand everything inside a subsystem, we can temporarily forget about irrelevant details.

- Engineers can re-use existing designs when incorporating subsystems into the systems they’re designing.

Many systems are hierarchical in nature. A graph of such a system is a tree, which is usually drawn upside-down, with the root at the top. A node directly above another node is a parent node and the node below it its child. A node without children is a leaf. A node that’s neither the root nor a leaf is an intermediate node.

A parts hierarchy or structural hierarchy is a tree where all relationships are has-part.

A class hierarchy or taxonomy is a tree where all relationships are is-a.
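The tree vocabulary above maps directly onto code. The minimal Python sketch below stores a hypothetical parts hierarchy for a bicycle as a dictionary from each parent to its children. It then finds the root, the intermediate nodes, and the leaves using set operations. The part names are made up for illustration.

```python
# Hypothetical parts hierarchy: each key has-part the items in its list.
PARTS = {
    "bicycle": ["frame", "wheel", "drivetrain"],
    "wheel": ["rim", "spokes", "tire"],
    "drivetrain": ["chain", "crank"],
}

def classify(tree):
    """Split a tree's nodes into its root, intermediate nodes, and leaves.

    Assumes every key has at least one child and the tree has exactly one root.
    """
    parents = set(tree)
    children = {child for kids in tree.values() for child in kids}
    (root,) = parents - children  # the only node that is nobody's child
    leaves = children - parents  # nodes without children
    intermediate = parents & children  # neither root nor leaf
    return root, intermediate, leaves

root, intermediate, leaves = classify(PARTS)
print(root)                  # bicycle
print(sorted(intermediate))  # ['drivetrain', 'wheel']
print(sorted(leaves))        # ['chain', 'crank', 'frame', 'rim', 'spokes', 'tire']
```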

Design process

Engineers focus on problems for which there are many practical solutions. They seek the best solution from among these alternatives. To help with that, they follow a procedure known as the engineering design process [Voland2004].

The artifacts to design have form and purpose, and the form must be appropriate for the purpose [Brockman2009]. A producer produces an artifact in the engineering environment, while an operator uses the artifact in the operating environment.

Operators have performance goals, or specifications. Producers have cost goals, or requirements. An engineer expresses goals quantitatively as constraints or objectives. A constraint is a hard limit, for instance water-resistant up to 20m. An objective is a desire for minimizing or maximizing a value, for example as thin as possible.
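Here is a minimal Python sketch that makes the difference between a constraint and an objective concrete. The designs and their numbers are hypothetical. The constraint removes every design that isn't water-resistant to at least 20 m. The objective then picks the thinnest of the remaining designs.

```python
# Hypothetical watch designs: (name, water resistance in m, thickness in mm).
designs = [
    ("A", 30, 9.5),
    ("B", 10, 6.0),
    ("C", 50, 11.0),
    ("D", 20, 8.2),
]

# Constraint: a hard limit that every acceptable design must satisfy.
feasible = [d for d in designs if d[1] >= 20]

# Objective: among the feasible designs, minimize thickness.
best = min(feasible, key=lambda d: d[2])
print(best[0])  # D
```

Design B is the thinnest overall. It loses anyway, because no amount of optimizing an objective can make up for violating a constraint.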

The engineering design process refines the form until it’s acceptable in both the operating and engineering environments. The design process to solve an engineering problem consists of the following steps [Voland2004]:

- Needs assessment. Establish the need for a solution. This may be an unmet need or an improvement of an earlier solution with weaknesses or shortcomings.

The output of this phase is a design proposal, which justifies the need for a solution and expresses this need in precise and accurate terms. The design proposal lists the objective (why), background (who, where), method (how, when), expected results (what), and costs (how much).

The background describes the users to serve and the environment in which the solution must operate. It also evaluates existing solutions and prior work.

- Problem formulation. Define the problem in the form of design goals that any viable solution must meet, using specifications and requirements.

The “real” problem to solve is usually different from the initial statement. Several heuristics help with discovering the “real” problem:

- The statement-restatement technique rewrites the initial statement in different ways to gain more insight into the real problem. You can use words, diagrams, or mathematical formulas.

- A why-why diagram places the initial statement on the left and possible underlying sources on the right. One can identify the sources for those sources again, etc. to get more and more specific.

- A Duncker diagram matches present and desired state. One keeps rewriting these until there is satisfactory correlation between them. Under each state one then lists solutions at three levels: general, functional, and specific. General solutions can either take an action to achieve the desired state, or transform the present state to make it acceptable.

- Kepner-Tregoe situation analysis, see below.

For most problems, the solution space is too large to search exhaustively. A more practical approach is to look at the problem state and desired solution state and develop a strategy for traversing the path between them.

One such strategy is to decompose the problem into a set of design goals that any viable solution must achieve. Design goals can be generic (like safety, reliability, performance, minimum costs, etc.) or problem-specific. Some design goals require complete achievement (MUSTs), whereas others (WANTs) have associated quantitative boundaries within which solutions must fit.

Once you’ve settled on the design goals, you should prioritize them.

- Abstraction and synthesis.

In abstraction, the engineer breaks the problem down into as many different functional parts as possible, where the subproblems ideally are independent.

Models help us recognize what we do and don’t know about a problem and its solution. They can transform an unfamiliar problem into a set of recognizable subproblems that may be much easier to solve. Examples of models are miniatures, diagrams, sets of mathematical equations, and computer simulations. Models are approximations that leave out unnecessary detail.

Synthesis uses the building blocks identified during abstraction to generate solutions to the original problem. Synthesis is the creative phase, so use creativity-stimulating techniques like brainstorming to generate ideas. Instead of rejecting impractical ideas outright, try to mold them into revised forms that are feasible.

A morphological chart can help with synthesis. The rows correspond to design goals and the columns to different ways to achieve them. A solution is a combination of cells, one per row.

- Analysis. Each design alternative has its own strengths and weaknesses. Establish objective evaluation criteria to evaluate them, including how easy the design is to implement and to misuse or abuse. Cost is almost always a criterion. You can use different measures for this, like Return on Investment (ROI) or Net Present Value (NPV).

Rank-order the design goals. Assign weights to them, either directly or indirectly via categories, like critical/important/optional.

Rate each design alternative on every evaluation criterion. If a natural way of scoring (like dollars for cost) is available, then use that. Otherwise, use a ranking scale, like excellent = 10, good = 8, etc. You may have to build prototypes to get the scores.

Add the scores to a decision matrix, where the rows represent the alternatives and the columns the criteria. For each criterion, multiply the alternative’s score by the weight. Then sum over all criteria to get a total score. Treat total scores that differ by less than 10% as ties. Select the best alternative.

Kepner-Tregoe analysis (see below) builds upon this basic approach.

- Implementation. Develop the final solution, converting the design and raw materials into the desired product or system.

Materials have properties that we classify in categories, like mechanical, electrical, physical, chemical, thermal, and economic. The materials’ properties must match both the performance and functional requirements of the product and the processing requirements for its manufacture. Engineers must be aware of the properties of the various materials when they design alternatives to prevent coming up with infeasible designs.

During implementation, test whether the product does indeed meet its requirements before going into full-scale manufacturing. Distribute the finished product or system to the intended clients/customers/users. Activate any safety-critical subsystems first and start monitoring the system’s performance.

Receive feedback for the next-generation design. Since designing requires making trade-offs, there is seldom a perfect solution to the problem. Therefore, it’s common to repeat the whole process multiple times, each time incorporating learnings from real-world usage.

- Reflection. Contemplate the lessons learned and knowledge acquired and optionally write a report on the project.

The problem itself, or at least the engineer’s understanding of it, evolves during this process. The design process is therefore not a linear sequence of steps, but more of an iterative process.

The engineer can capture their growing understanding of both the problem and the solution using a decision tree. A decision tree is a hierarchy where child nodes are alternative options to meet the goal of their parent node.
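The decision matrix from the analysis step fits in a few lines of code. The Python sketch below uses hypothetical weights, and scores on a 0-10 ranking scale. It assumes that "differ by less than 10%" is measured relative to the highest total score.

```python
# Hypothetical weights per criterion (higher = more important).
WEIGHTS = {"cost": 5, "reliability": 4, "ease_of_use": 2}

# Hypothetical scores per alternative on a 0-10 ranking scale.
SCORES = {
    "Alternative 1": {"cost": 8, "reliability": 6, "ease_of_use": 9},
    "Alternative 2": {"cost": 6, "reliability": 9, "ease_of_use": 7},
    "Alternative 3": {"cost": 9, "reliability": 5, "ease_of_use": 4},
}

def total_scores(weights, scores):
    """Multiply each score by its criterion's weight and sum per alternative."""
    return {
        alternative: sum(weights[criterion] * score for criterion, score in row.items())
        for alternative, row in scores.items()
    }

def best_alternatives(totals, tie_margin=0.10):
    """Return the top alternative plus any whose total is within tie_margin of it."""
    top = max(totals.values())
    return sorted(a for a, total in totals.items() if total > top * (1 - tie_margin))

totals = total_scores(WEIGHTS, SCORES)
print(totals)                     # {'Alternative 1': 82, 'Alternative 2': 80, 'Alternative 3': 73}
print(best_alternatives(totals))  # ['Alternative 1', 'Alternative 2']
```

Here Alternatives 1 and 2 count as a tie, so choosing between them takes more information than the matrix provides.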

Kepner-Tregoe analysis

Kepner-Tregoe analysis consists of the following steps:

- Situation analysis. Identify the most urgent or critical aspects of the situation based on the criteria of timing (relative urgency), trend (expected growth pattern of the subproblem), and impact (severity of negative consequences). Rank each aspect of the problem on each criterion using High, Medium, or Low.

- Problem analysis. Now look at the problem in the dimensions of characteristics (what), timing (when), location (where), and magnitude (how much). For each dimension, look at the positive and negative, then at the difference. For instance, ask what the problem is and what it’s not, how those answers differ, and what causes the distinction. Looking at all these different angles helps to determine the causes of the problem.

- Decision analysis. Divide the design goals into MUSTs and WANTs. Reject alternatives that fail to meet all MUST goals. Rank the remaining alternatives based on their scores on the WANT goals, just like with a decision matrix.

- Potential problem analysis. Consider any risks or hazards associated with the winning alternative. Calculate the threat associated with each risk as the product of its probability and severity, and sum the threats. If the total threat associated with the winning alternative is too high, repeat for the second-best alternative, etc. until you find an acceptable solution.
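The last two Kepner-Tregoe steps are mechanical enough to sketch in code. The Python below covers only decision analysis and potential problem analysis. It works on hypothetical alternatives, each with a flag for whether it meets every MUST goal, a weighted WANT score, and a list of (probability, severity) risks. The threshold `MAX_THREAT` is an assumption, since the method leaves "too high" to the engineer.

```python
# Hypothetical alternatives: whether they meet every MUST goal, their weighted
# WANT score (as in a decision matrix), and risks as (probability, severity).
ALTERNATIVES = {
    "X": {"meets_musts": True, "want_score": 85, "risks": [(0.5, 8), (0.2, 5)]},
    "Y": {"meets_musts": False, "want_score": 95, "risks": []},
    "Z": {"meets_musts": True, "want_score": 78, "risks": [(0.1, 6)]},
}
MAX_THREAT = 3.0  # hypothetical limit on the acceptable total threat

def decision_analysis(alternatives):
    """Drop alternatives that miss a MUST goal; rank the rest by WANT score."""
    candidates = [name for name, alt in alternatives.items() if alt["meets_musts"]]
    return sorted(candidates, key=lambda name: alternatives[name]["want_score"], reverse=True)

def total_threat(risks):
    """Threat per risk is probability * severity; sum over all risks."""
    return sum(probability * severity for probability, severity in risks)

def potential_problem_analysis(alternatives, ranked, max_threat):
    """Return the best-ranked alternative whose total threat is acceptable."""
    for name in ranked:
        if total_threat(alternatives[name]["risks"]) <= max_threat:
            return name
    return None  # no acceptable solution: revisit the design

ranked = decision_analysis(ALTERNATIVES)
print(ranked)  # ['X', 'Z']
print(potential_problem_analysis(ALTERNATIVES, ranked, MAX_THREAT))  # Z
```

Y has the highest WANT score but fails a MUST goal. X wins the decision analysis, but its total threat of 5.0 is too high, so the process falls back to Z.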

Evolution of an engineering discipline

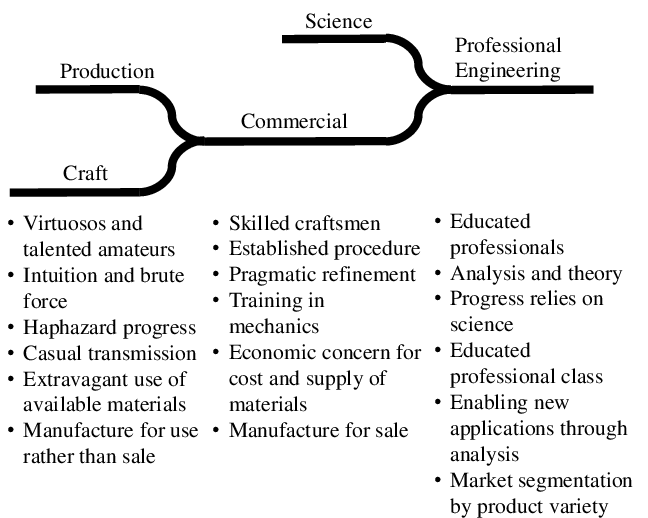

Engineering applies science, which takes time to develop. A new field of engineering therefore necessarily grows from humble beginnings. [Shaw1990] provides a model of such evolution, shown in the figure above.

[Brockman2009] lists over 15 different engineering disciplines, like aerospace, chemical, civil, electrical, mechanical, and nuclear engineering. Before we judge whether software engineering deserves to be on that list, let’s look at software in more detail.

Software

Software is a program for a computer.

A program is a sequence of coded instructions that can be inserted into a computer.

We distinguish two types of software:

- System software: operating systems, device drivers, and utilities.

- Application software: productivity software, graphics software, databases, browsers, games, and the like.

System software is essential for the functioning of a general-purpose computer, managing hardware and providing a platform on which application software runs. System software provides value to the end user indirectly, through application software.

Most of what follows should be applicable to both categories. In case of conflict, however, we’ll focus on application software, because the majority of software falls into that bucket.

In summary, software consists of instructions for a computer that tell it what to compute. Let’s look at the science of computing next.

Computing

Automata theory is the study of abstract computing devices, named machines or automata [Hopcroft2007]. The theory formally defines different types of automata and derives mathematical proofs about them.

Finite automata

The simplest types of automata are finite automata. Formally, a Deterministic Finite Automaton (DFA) is a tuple $A = (Q, \Sigma, \delta, q_0, F)$, where

- $Q$ is a finite set of states the automaton can be in.

- $\Sigma$ is a finite set of symbols, called the input alphabet of the automaton.

- $\delta: Q \times \Sigma \to Q$ is the transition function mapping states to successor states while consuming input.

- $q_0 \in Q$ is the start state.

- $F \subseteq Q$ is the set of accepting states.

We can visually present a DFA using a transition diagram. For instance, the DFA $A = (\{p, q, r\}, \{0, 1\}, \delta, p, \{r\})$ may look like this for a suitable $\delta$:

```mermaid
stateDiagram-v2
    direction LR
    [*] --> p
    p --> p: 1
    p --> q: 0
    q --> q: 0
    q --> r: 1
    r --> r: 0,1
    r --> [*]
```

An alternative description of a DFA uses a table format. A transition table shows inputs as rows, the current states as columns, and next states in the intersection of the two. For example, the DFA above looks like this:

|   | p | q | r |
|---|---|---|---|
| 0 | q | q | r |
| 1 | p | r | r |

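Let $w = a_1 a_2 \cdots a_n$ be a word made up of symbols $a_i$ such that $a_i \in \Sigma$. If there are transitions in $\delta$ such that $\delta(q_0, a_1) = q_1$, $\delta(q_1, a_2) = q_2$, etc. up to $\delta(q_{n-1}, a_n) = q_n$, and $q_n \in F$, then $A$ accepts $w$. The set of all words that $A$ accepts is the language of $A$, $L(A)$.

For instance, the language of the automaton above is the set of strings composed of 0s and 1s that contain the substring 01.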
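A DFA is straightforward to simulate in code. The following minimal Python sketch encodes the transition table above as a dictionary and runs the automaton over a word. The names `DELTA`, `START`, and `ACCEPTING` are illustrative and don't come from any library.

```python
# Transition table of the DFA above: (state, input symbol) -> next state.
DELTA = {
    ("p", "0"): "q", ("p", "1"): "p",
    ("q", "0"): "q", ("q", "1"): "r",
    ("r", "0"): "r", ("r", "1"): "r",
}
START = "p"
ACCEPTING = {"r"}

def accepts(word):
    """Run the DFA on word and report whether it ends in an accepting state."""
    state = START
    for symbol in word:
        state = DELTA[(state, symbol)]  # exactly one successor per step
    return state in ACCEPTING

print(accepts("1101"))  # True
print(accepts("1110"))  # False
```

Because $\delta$ is a total function over $Q \times \Sigma$, each step is a single table lookup. Symbols outside $\Sigma$ raise a `KeyError` in this sketch.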

Languages accepted by DFAs are regular languages. Regular languages have many applications in software. For instance, they describe keywords and valid identifiers in programming languages or the structure of a URL. They’re also useful in searching documents and describing protocols.

A Nondeterministic Finite Automaton (NFA) is like a DFA, except that $\delta$ returns a subset of $Q$ rather than a single state. In other words, an NFA can be in more than one state at the same time. It’s possible to convert an NFA to a DFA, so the languages accepted by NFAs are also regular languages.
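Simulating an NFA shows why it’s no more powerful than a DFA. Instead of tracking a single state, you track the set of all states the NFA could be in. The sketch below assumes a small hypothetical NFA that accepts strings over {0, 1} ending in 01. Each set it computes corresponds to one state of the equivalent DFA that the subset construction would build.

```python
# Hypothetical NFA accepting strings over {0, 1} that end in 01.
# delta returns a *set* of successor states; missing entries mean the empty set.
NFA_DELTA = {
    ("q0", "0"): {"q0", "q1"},
    ("q0", "1"): {"q0"},
    ("q1", "1"): {"q2"},
}
NFA_START = "q0"
NFA_ACCEPTING = {"q2"}

def nfa_accepts(word):
    """Track every state the NFA could be in after each symbol."""
    current = {NFA_START}
    for symbol in word:
        current = set().union(*(NFA_DELTA.get((q, symbol), set()) for q in current))
    # Accept if any of the possible states is accepting.
    return bool(current & NFA_ACCEPTING)

print(nfa_accepts("1101"))  # True
print(nfa_accepts("0110"))  # False
```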

An $\epsilon$-NFA is an NFA with the extra feature that it can transition on $\epsilon$, the empty string. In other words, an $\epsilon$-NFA can make transitions without consuming input. It’s possible to convert an $\epsilon$-NFA to a DFA as well, so the languages accepted by $\epsilon$-NFAs are also regular languages.
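The key step when simulating or converting an $\epsilon$-NFA is the $\epsilon$-closure. This is the set of states reachable from a given set using only $\epsilon$-transitions. Here is a minimal Python sketch with a hypothetical table `EPS` of $\epsilon$-transitions. A simulator would apply it to the start state and again after each consumed symbol.

```python
# Hypothetical epsilon-transitions: state -> states reachable without input.
EPS = {"s0": {"s1"}, "s1": {"s2"}, "s3": {"s0"}}

def epsilon_closure(states, eps):
    """Return every state reachable from `states` via zero or more ε-moves."""
    closure = set(states)
    stack = list(states)  # states whose ε-moves we still need to follow
    while stack:
        q = stack.pop()
        for nxt in eps.get(q, ()):
            if nxt not in closure:
                closure.add(nxt)
                stack.append(nxt)
    return closure

print(sorted(epsilon_closure({"s0"}, EPS)))  # ['s0', 's1', 's2']
print(sorted(epsilon_closure({"s3"}, EPS)))  # ['s0', 's1', 's2', 's3']
```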

Regular expressions are an alternative way of describing regular languages. They combine the symbols of $\Sigma$ using concatenation, the operators $+$ (union) and $^*$ (zero or more times), and parentheses. For instance, the regular expression $(0+1)^*01(0+1)^*$ defines the same language as the DFA above. We can convert regular expressions to DFAs and vice versa.
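Most programming languages ship a regular-expression library, though its syntax differs from the textbook notation. In Python’s standard `re` module, union is written `|` rather than `+`, which means "one or more times" there. Here is a minimal sketch of the regular expression above:

```python
import re

# (0+1)*01(0+1)* in textbook notation; Python writes union as | instead of +.
CONTAINS_01 = re.compile(r"(0|1)*01(0|1)*")

def matches(word):
    # fullmatch requires the whole word to match, like DFA acceptance.
    return CONTAINS_01.fullmatch(word) is not None

print(matches("1101"))  # True
print(matches("1110"))  # False
```

Practical regex engines like Python’s also support features such as backreferences, which go beyond regular languages.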

Regular languages can describe parts of programs, but not entire programs. The memory of a DFA is too limited, since it consists of a finite number of states. Let’s look at more powerful automata that define more useful languages.

Pushdown automata

A Pushdown Automaton (PDA) is an $\epsilon$-NFA with a stack on which it can store information. A PDA can access information on the stack only in a last-in-first-out way. The stack allows the PDA to remember things, which makes it more powerful than a DFA. For instance, no DFA can recognize palindromes, but a PDA can.

Formally, a PDA is a tuple $P = (Q, \Sigma, \Gamma, \delta, q_0, Z_0, F)$. We’ve seen most of these symbols already in the definition of DFAs. The new ones are:

- $\Gamma$ is the alphabet of stack symbols, the information that can go on the stack.

- $Z_0 \in \Gamma$ is the initial symbol on the stack when the PDA starts.

The transition function $\delta$ is slightly different. It takes the current state, an input symbol (or $\epsilon$), and the symbol from the top of the stack as input. It outputs pairs consisting of a new state and a string of stack symbols that replace the top of the stack.

- This stack string can be $\epsilon$, the empty string, in which case $\delta$ pops an element off the stack.

- It can also be the same as the top of the stack, in which case the stack remains the same.

- Or it can be a different string, even consisting of multiple symbols. In that case, the PDA pops the top symbol off the stack and pushes the output string onto the stack, one symbol at a time, so that the leftmost symbol of the string ends up on top.

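We can visualize PDAs using transition diagrams, just like DFAs. The edges show both the input symbol consumed and the old and new top of the stack. For instance, an edge labeled $a, X/\alpha$ between nodes $q$ and $p$ means that $\delta(q, a, X)$ contains the pair $(p, \alpha)$. Here, $a \in \Sigma \cup \{\epsilon\}$, $X \in \Gamma$, and $\alpha \in \Gamma^*$, where $\Gamma^*$ is the set of all strings of stack symbols.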

A PDA can accept a word in two ways:

- By final state, like for finite automata.

- By empty stack, which is a new capability compared to finite automata. In this definition, when the PDA pops the last symbol off its stack, the input it consumed up to then is a word that it accepts.

These two ways of accepting words, and thus of defining a language, turn out to be equivalent. Suppose a PDA $P_F$ accepts the language $L$ by final state. We can construct a different PDA $P_N$ that accepts precisely $L$ by empty stack. The converse is also true.
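A PDA may be nondeterministic, so simulating one means exploring every configuration it could reach. A configuration consists of the state, the input position, and the stack contents. The Python sketch below explores configurations breadth-first for a hypothetical PDA that accepts palindromes over 0 and 1 by final state. It guesses where the middle of the word is, pushes symbols before that point, and pops matching symbols after it. The state names and the stack symbol `Z` (playing the role of $Z_0$) are assumptions for illustration.

```python
from collections import deque

def build_palindrome_pda():
    """Transition function of a hypothetical PDA for palindromes over {0, 1}.

    Keys are (state, input symbol or "" for epsilon, stack top); values are
    sets of (next state, string replacing the top, leftmost symbol on top).
    """
    delta = {}

    def add(key, move):
        delta.setdefault(key, set()).add(move)

    for a in "01":
        for top in "01Z":
            add(("q0", a, top), ("q0", a + top))  # push the symbol read
            add(("q0", a, top), ("q1", top))      # guess: a is the middle symbol
        add(("q1", a, a), ("q1", ""))             # pop a matching symbol
    for top in "01Z":
        add(("q0", "", top), ("q1", top))         # guess: middle of an even-length word
    add(("q1", "", "Z"), ("q2", "Z"))             # only Z left: move to accepting state
    return delta

def pda_accepts(delta, word, start="q0", z0="Z", accepting=("q2",)):
    """Explore all configurations (state, position, stack); accept by final state."""
    queue = deque([(start, 0, z0)])
    seen = set()
    while queue:
        config = queue.popleft()
        if config in seen:
            continue
        seen.add(config)
        state, pos, stack = config
        if state in accepting and pos == len(word):
            return True
        if not stack:
            continue  # an empty stack blocks all further moves
        top, rest = stack[0], stack[1:]
        # Epsilon moves consume no input.
        for nxt, push in delta.get((state, "", top), ()):
            queue.append((nxt, pos, push + rest))
        # Regular moves consume the next input symbol.
        if pos < len(word):
            for nxt, push in delta.get((state, word[pos], top), ()):
                queue.append((nxt, pos + 1, push + rest))
    return False

delta = build_palindrome_pda()
print(pda_accepts(delta, "0110"))  # True
print(pda_accepts(delta, "0111"))  # False
```

The search terminates because the input position is bounded by the word length, and the stack never grows beyond one symbol per consumed input symbol plus `Z`.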

We call the languages accepted by PDAs the context-free languages. Context-free languages, like regular languages, have important applications in software development. Before we dive into those, let’s look at an alternative way to specify the context-free languages: context-free grammars.

A Context-Free Grammar (CFG), or just grammar, is a tuple $G = (V, T, P, S)$, where

- $V$ is a set of variables. Each variable represents a language, or set of strings. Variables are building blocks for the bigger language that the grammar defines.

- $T$ is a set of terminals. A terminal is a symbol in the language the grammar defines.

- $P$ is a set of productions. A production is of the form $A \to \alpha$, where the variable $A$ is the head and $\alpha$ is the body. A body consists of zero or more variables and terminals.

- $S \in V$ is the start symbol.

For instance, a grammar for the palindromes over 0 and 1 is:

$$G_{pal} = (\{P\}, \{0, 1\}, A, P)$$

where $A$ is the following set of productions:

$$P \to \epsilon \mid 0 \mid 1 \mid 0P0 \mid 1P1$$

We can derive a word from a CFG $G$. Start with its start symbol $S$, and recursively replace variables using the productions until only terminal symbols remain. The set of words we can derive from a grammar is its language, $L(G)$.
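Derivation can be mechanized. The sketch below assumes single-character symbols and a hypothetical `PRODUCTIONS` dictionary for $G_{pal}$. It always expands the leftmost variable, works breadth-first, and collects every word up to a given length. Pruning on the number of terminals only guarantees termination for grammars like $G_{pal}$, whose recursive productions always add terminals.

```python
from collections import deque

# Productions of the palindrome grammar: P -> ε | 0 | 1 | 0P0 | 1P1.
PRODUCTIONS = {"P": ["", "0", "1", "0P0", "1P1"]}

def derive(start, productions, max_len):
    """Collect every terminal word of length <= max_len derivable from start."""
    words = set()
    queue = deque([start])
    seen = {start}
    while queue:
        form = queue.popleft()
        # Find the leftmost variable in the sentential form.
        i = next((k for k, ch in enumerate(form) if ch in productions), None)
        if i is None:
            words.add(form)  # only terminals remain: a word of the language
            continue
        for body in productions[form[i]]:
            new = form[:i] + body + form[i + 1:]
            # Terminals never disappear, so prune forms that are already too long.
            terminals = sum(ch not in productions for ch in new)
            if terminals <= max_len and new not in seen:
                seen.add(new)
                queue.append(new)
    return sorted(words, key=lambda w: (len(w), w))

print(derive("P", PRODUCTIONS, 3))
# ['', '0', '1', '00', '11', '000', '010', '101', '111']
```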

A parse tree is a tree representation of a derivation in a CFG $G$. The root of this tree is the start symbol $S$ of $G$. For each production $A \to X_1 X_2 \cdots X_k$ used in the derivation, the node labeled $A$ has children labeled $X_1, X_2, \ldots, X_k$, in that order.

Here’s an example parse tree for $G_{pal}$ that derives the palindrome 01010:

```mermaid
graph TB
    A[P]
    B[0]
    C[P]
    D[0]
    E[1]
    F[P]
    G[1]
    H[0]
    A --- B
    A --- C
    A --- D
    C --- E
    C --- F
    C --- G
    F --- H
```

The leaves from left to right spell the derived word.

Languages we can derive from CFGs are precisely the context-free languages. For every CFG $G$ that defines a language $L(G)$, we can construct a PDA $P$ such that $L(P) = L(G)$. The converse is also true.

Context-free grammars can describe the syntax of programming languages. A parse tree of a CFG for a programming language describes a single program in that language. For instance, here’s a fictitious parse tree for the infamous “Hello, world!” program in C:

```mermaid
graph TB
    Program --- Function --- Declaration --- TypeSpecifier --- int
    Function --- CompoundStatement --- Statement --- ExpressionStatement --- CallExpression --- Identifier --- printf
    CallExpression --- StringLiteral
    StringLiteral["#quot;Hello, world!#quot;"]
```

We now have the vocabulary to describe the structure of a program and of programming languages. However, PDAs aren’t powerful enough to describe the runtime behavior of all but the simplest programs. Let’s next look at automata that can.

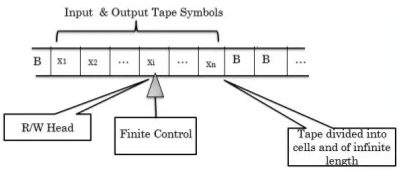

Turing machines

A Turing Machine (TM) is a generalization of a PDA where an infinite tape replaces the stack. This tape is a linear sequence of cells, and the TM’s head points to one of them. Initially, the input is on the tape, one symbol per cell, and the head points to the first input symbol. Left and right of the input, all cells are blank.

Formally, a TM is a tuple $M = (Q, \Sigma, \Gamma, \delta, q_0, B, F)$. We’ve seen most of these symbols already in the definition of previous automata. The new and changed ones are:

- $\Gamma$ is a set of tape symbols. These are analogous to the stack symbols of a PDA. Note that $\Sigma \subset \Gamma$.

- $B$ is the blank symbol, where $B \in \Gamma$ and $B \notin \Sigma$.

- The transition function $\delta$ takes a state and tape symbol as input. It produces a triple $(p, Y, D)$. Here $p$ is the next state. $Y$ is the tape symbol written to the cell under the head. $D$ is the direction in which the head moves: $L$ = left and $R$ = right.

We can visualize TMs using transition diagrams, where edges are of the form $X/Y\ D$. Here $X$ is the tape symbol at the head and $Y$ is the replacement tape symbol. $D$ is the direction in which to move the head ($L$ or $R$).
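A TM is easy to simulate, even if programming one is tedious. The Python sketch below assumes a hypothetical single-tape TM that increments a binary number. It moves right to the first blank, then moves left turning 1s into 0s until it can absorb the carry. As a simplification, the machine halts when no transition applies, and it accepts if it halts in an accepting state. Storing the tape as a dictionary keeps it unbounded in both directions.

```python
# Hypothetical TM that increments a binary number. TM_DELTA maps
# (state, tape symbol) -> (next state, symbol to write, head move L/R).
BLANK = "B"
TM_DELTA = {
    ("right", "0"): ("right", "0", "R"),   # scan right to the end of the input
    ("right", "1"): ("right", "1", "R"),
    ("right", BLANK): ("carry", BLANK, "L"),
    ("carry", "1"): ("carry", "0", "L"),   # 1 + carry = 0, keep carrying
    ("carry", "0"): ("done", "1", "R"),    # 0 + carry = 1, carry absorbed
    ("carry", BLANK): ("done", "1", "R"),  # ran off the left end: new digit
}
TM_ACCEPTING = {"done"}

def run_tm(word, start="right"):
    """Simulate the TM until no transition applies; return (accepted, tape)."""
    tape = dict(enumerate(word))  # sparse tape: missing cells are blank
    head, state = 0, start
    while (state, tape.get(head, BLANK)) in TM_DELTA:
        state, symbol, move = TM_DELTA[(state, tape.get(head, BLANK))]
        tape[head] = symbol
        head += 1 if move == "R" else -1
    cells = [tape.get(i, BLANK) for i in range(min(tape), max(tape) + 1)]
    return state in TM_ACCEPTING, "".join(cells).strip(BLANK)

print(run_tm("1011"))  # (True, '1100')
print(run_tm("111"))   # (True, '1000')
```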

Several variations of TMs exist, such as those with multiple tapes or with the ability to keep the head in place. A non-deterministic variant exists as well. All these variations have the same power, in the sense that deterministic one-tape TMs can simulate them. Simpler models that can simulate a TM exist as well, like a PDA with two stacks.

The languages TMs accept are the Recursively Enumerable (RE) languages. Like with the other types of languages, there are alternative models for expressing RE languages, for instance $\lambda$-calculus and general recursive functions. We call any model that can express all RE languages Turing-complete.

Real computers are Turing-complete, if we assume the computer has access to an infinite number of disks of external storage. These disks simulate the TM’s infinite tape.

The Church-Turing thesis states that anything computable is computable by a TM. In other words, there can be no more powerful automata than TMs. Despite the lack of a formal proof, most people accept this thesis as true.

Programming a TM isn’t practical for solving real-world problems. The linear access model of a TM to its external storage, the tape, means the TM has to travel great distances. Real computers can access memory locations directly, which is much more efficient. Having said that, a TM is a useful abstraction to reason about computation.

Output

The transition function of an automaton gives the next state and, depending on the automaton, writes to external storage (stack or tape). We can change $\delta$ to also output something. A finite state machine that produces output is a transducer.

Conceptually, we can think of a transducer as a TM with two tapes: one for input and one for output. This implies that the output is a string of tape symbols from $\Gamma$.

Output is often omitted in automata theory, which focuses on solving problems by accepting input. For real computer programs, however, output is crucial.

One may argue that the output of a TM is somewhere on its tape. This works for TMs and to some extent for PDAs, but not for DFAs. As we’ve seen, DFAs are useful in many situations in software development and some of those situations require output.

For instance, a tokenizer is a program that breaks a stream of text into individual tokens. These tokens may be part of a grammar, in which case we call the tokenizer a lexer or lexical analyzer. A program that analyzes text against a grammar and produces parse trees is a parser. The lexer must output the token it accepted, so that the parser can use it in its evaluations.
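A lexer is a natural example of a transducer. It is a small state machine that outputs each token it recognizes, in addition to consuming input. The hand-written Python sketch below supports just two hypothetical token kinds: identifiers (`IDENT`) and integer literals (`NUMBER`). Whitespace separates tokens, and any other character is an error.

```python
def tokenize(text):
    """A lexer sketch: a small state machine that emits (kind, lexeme) tokens."""
    tokens = []
    state, lexeme = "start", ""

    def emit():
        # Output the token for the lexeme accepted so far, if any.
        if state == "ident":
            tokens.append(("IDENT", lexeme))
        elif state == "number":
            tokens.append(("NUMBER", lexeme))

    for ch in text + " ":  # a trailing space flushes the last token
        if state == "ident" and (ch.isalnum() or ch == "_"):
            lexeme += ch
        elif state == "number" and ch.isdigit():
            lexeme += ch
        else:
            emit()  # the current token ends here
            if ch.isalpha() or ch == "_":
                state, lexeme = "ident", ch
            elif ch.isdigit():
                state, lexeme = "number", ch
            elif ch.isspace():
                state, lexeme = "start", ""
            else:
                raise ValueError(f"unexpected character {ch!r}")
    return tokens

print(tokenize("x1 42"))  # [('IDENT', 'x1'), ('NUMBER', '42')]
```

A parser would consume this token list instead of raw characters.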

Model of software

Here’s a concept map of a software application based on automata theory:

```mermaid
graph
    Application --has--> State
    Application --allows--> Transition
    Transition --from/to --> State
    Transition --accepts--> Input
    Transition --produces--> Output
    Transition --reads from &\nwrites to --> ES[External storage]
```

This model is admittedly not super useful yet, but it’ll serve as the basis for later enhancements.
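Here is a minimal Python sketch of a hypothetical turnstile application that connects the concept map to code. Its state is either locked or unlocked. Each transition accepts an input and produces an output. A dictionary stands in for external storage and counts passages. Everything here, including the names, is illustrative.

```python
from dataclasses import dataclass, field

# (state, input) -> (next state, output)
TRANSITIONS = {
    ("locked", "coin"): ("unlocked", "unlock"),
    ("locked", "push"): ("locked", "alarm"),
    ("unlocked", "coin"): ("unlocked", "refund"),
    ("unlocked", "push"): ("locked", "lock"),
}

@dataclass
class Turnstile:
    state: str = "locked"
    # In-memory stand-in for external storage.
    storage: dict = field(default_factory=lambda: {"passages": 0})

    def handle(self, event):
        """One transition: accept an input, change state, write storage, produce output."""
        self.state, output = TRANSITIONS[(self.state, event)]
        if output == "lock":
            self.storage["passages"] += 1  # someone walked through
        return output

turnstile = Turnstile()
print([turnstile.handle(e) for e in ["push", "coin", "push", "coin", "coin"]])
# ['alarm', 'unlock', 'lock', 'unlock', 'refund']
```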

Now that we understand the basics of both software and engineering, let’s put these two together.

Software engineering

Software Engineering is the application of a systematic, disciplined, quantifiable approach to the development, operation, and maintenance of software; that is, the application of engineering to software.

The term software engineering was first used in the title of a NATO conference in 1968:

The phrase ‘software engineering’ was deliberately chosen as being provocative, in implying the need for software manufacture to be based on the types of theoretical foundations and practical disciplines, that are traditional in the established branches of engineering.

— [Naur1969]

Since then, people have worked towards the goal of making software development an engineering discipline.

SWEBOK

Some of that work has taken place at the Institute of Electrical and Electronics Engineers (IEEE). This professional association for electrical engineers and related disciplines covers different knowledge areas in what it calls societies.

The Computer Society (IEEE CS) “engages computer engineers, scientists, academia, and industry professionals from all areas of computing.” It also “sets the standard for the education and engagement that fuels continued global technological advancement.”

One of its publications is The Guide to the Software Engineering Body of Knowledge [SWEBOK].

The SWEBOK organizes material in knowledge areas (KAs):

1. Software Requirements

2. Software Architecture

3. Software Design

4. Software Construction

5. Software Testing

6. Software Engineering Operations

7. Software Maintenance

8. Software Configuration Management

9. Software Engineering Management

10. Software Engineering Process

11. Software Engineering Models and Methods

12. Software Quality

13. Software Security

14. Software Engineering Professional Practice

15. Software Engineering Economics

16. Computing Foundations

17. Mathematical Foundations

18. Engineering Foundations

Knowledge areas 16-18 provide the theoretical foundations. KA 10 describes the engineering process, while 1-9 cover the activities in that process. The other KAs deal with related topics.

Each knowledge area breaks down into topics and subtopics, summarizes the key concepts, and includes a reference list for detailed information.

IEEE CS stresses that SWEBOK is a guide to the body of knowledge for software engineering. The body of knowledge itself consists of the literature that SWEBOK references. SWEBOK’s Appendix C contains a list of 37 books that together cover all the KAs.

Is this actually engineering?

The SWEBOK uses the word “engineering” a lot, but is what it describes actually engineering? The year after SWEBOK V3 came out, Mary Shaw argued that what we’re doing in software development isn’t engineering [Shaw2015]. Alan Kay recently agreed [Kay2021]. This debate keeps flaring up from time to time [DeMarco2009], [Holub2023].

Some random data points further support this assessment:

- The Standish Group publishes a recurring CHAOS Report. The 2020 version states that 19% of IT projects are utter failures, and 50% fail in at least one way [Standish2020].

- The list of biggest data breaches ever shows that the top 10 all happened after publication of SWEBOK V3 [Statista2023].

These aren’t the results one would expect from engineering, so what’s going on? A complex question like that usually has more than one answer. Let’s look at some possibilities.

Management

Organizations achieve immensely different outcomes, even in level playing fields. Some organizations outperform their competitors in every important dimension, all at once.

[Kim2023] claims to understand why. The authors say all organizations are sociotechnical systems, where different types of work happen in different layers:

- Layer 1: the technical objects people work on, like source code.

- Layer 2: the tools and instrumentation through which people work on layer 1 objects, like compilers.

- Layer 3: the social circuitry, meaning the processes, procedures, norms, and routines, like software development processes.

They argue that performance in layer 3 dominates performance as a whole. In other words, winning organizations wire their social circuitry better. For instance, Agile methods outperform Waterfall [AgileVsWaterfall2023].

[Kim2023] offers the following tools to improve layer 3:

- Slowification to make it easier to solve problems.

- Simplification to make the problems themselves easier to solve.

- Amplification to make it obvious that there are problems that need solving.

Ignorance

Many software developers are unaware of the SWEBOK. I myself only found it when doing research for this book, and I’m an avid reader.

This problem goes beyond SWEBOK. For example, it’s been over 20 years since the [AgileManifesto], and there are still people doing Waterfall because they don’t know any better.

Sometimes people do know better, but still stick to their old ways. This may be due to a lack of discipline, or it may result from poor management, as stated above. It’s also possible that they’re confused by too many options.

Too many options

The SWEBOK references more than one approach for most knowledge areas. Contrast this with Toyota. Its Toyota Production System (TPS) is an approach that combines all three tools for improving layer 3 [Kim2023].

Taiichi Ohno, TPS’s founder, says TPS is about reducing waste through just-in-time production and automation with a human touch [Ohno1988]. However, [Spear1999] shows that the real power of TPS stems from standardizing all work, while responding to problems by improving the standards. In other words, Toyota uses the scientific method, with the standards playing the role of scientific theory/hypotheses.

Is it possible to find one way to do software development that’s optimal in all situations? The answer is probably negative. [Spear1999] describes one Toyota plant with two divisions, where each division used a different approach. These approaches were the result of the divisions encountering different problems during their operation and solving them according to their unique situation.

And yet, there is an underlying way of working in those divisions. Companies following TPS have a common sense of what the ideal production system would be. Its output:

- Is defect-free (it has the features and performance the customer expects);

- Can be delivered one request at a time (a batch size of one);

- Can be supplied on demand in the version requested;

- Can be delivered immediately;

- Can be produced without wasting any materials, labor, energy, or other resources (such as costs associated with inventory); and

- Can be produced in a work environment that’s safe physically, emotionally, and professionally for every employee.

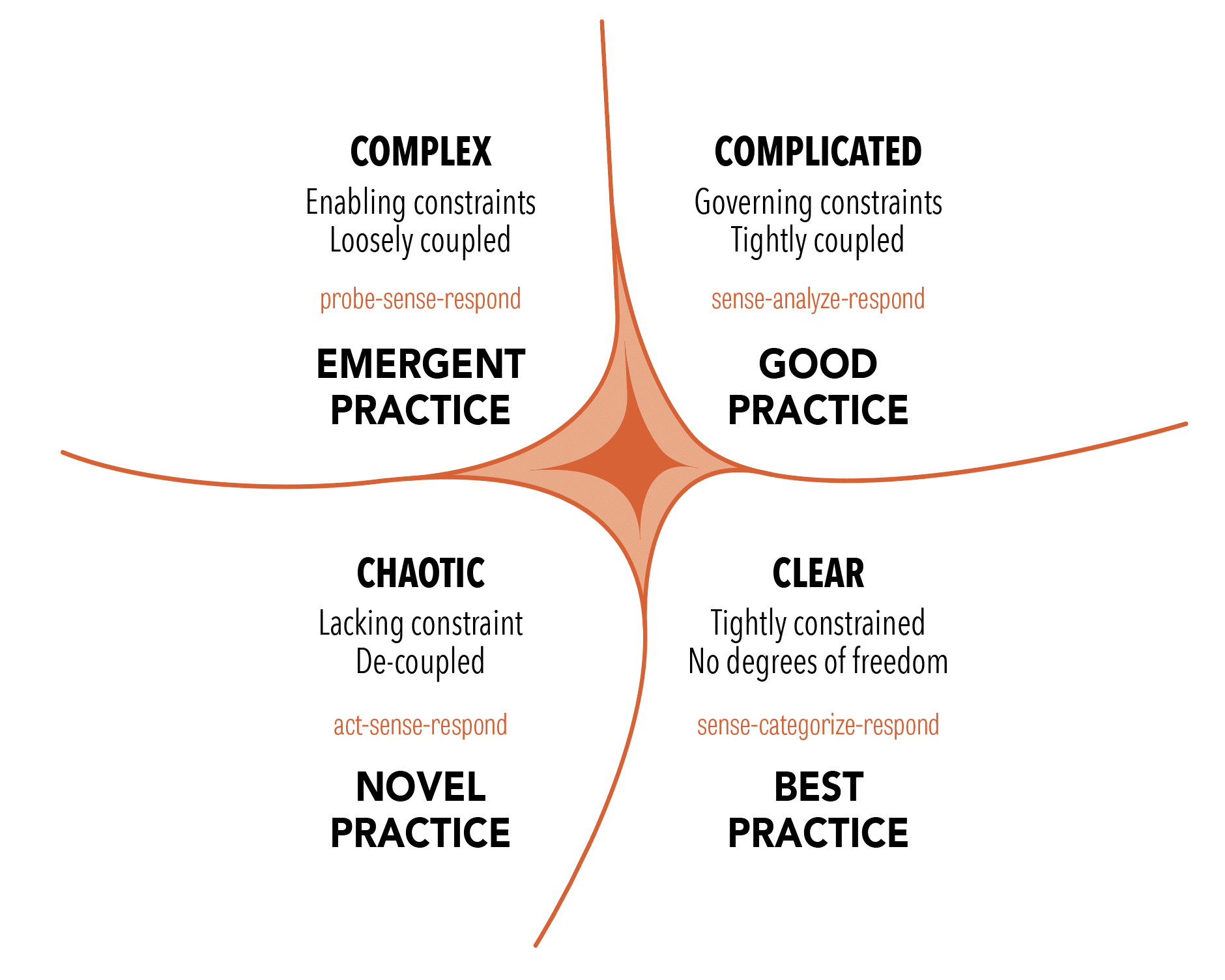

The Cynefin framework

Let’s look at this from the perspective of the Cynefin framework [Snowden2002] [Kurtz2003] [Snowden2007]. Here’s what its latest version looks like:

Software development isn’t in the Clear domain (formerly known as Simple, and later as Obvious). Therefore, there are no best practices that everyone should follow no matter the situation. The question is whether software development falls in the Complicated or the Complex domain.

Experts, such as engineers, rule the Complicated domain. The decision-making approach is to sense (get the facts), analyze, and respond. Here we use good, rather than best, practices, and can find multiple ways to success. This domain is the realm of the known unknowns, where at least one right answer exists.

In the Complex domain, there is no path to the one right answer. Here we’re in the realm of the unknown unknowns, where we can understand what happened only in retrospect. The best course of action is to probe (try something), sense (see patterns emerge), and respond. It must be safe to fail, or else people stop probing.

Consider a nuclear engineer designing a nuclear reactor. How often do they have to adapt their design to the discovery of a new radioactive element or isotope? Never. If that did happen, they would throw away their design and start afresh.

Yet these types of requirement changes are what we see in software development all the time. This prompted the Agile movement to adopt a different way of working that embraces change [AgileManifesto].

That’s a sign that at least some parts of software development fall in the Complex domain. Signs aren’t proof, however. In fact, different aspects may be in different domains. This matters, because we should approach problems in the two domains differently.

Let’s look at the SWEBOK knowledge areas through that lens and see what we can learn.

The modern synthesis

If software engineering is to be the application of science to solve software development problems, then we need a science of software development. Some pieces of that are available to us, but we still need to synthesize a coherent picture.

Something similar happened in biology in the 20th century.

Evolution

Biology is the scientific study of life. While it has a broad scope, there is a unifying theory:

Nothing in biology makes sense except in the light of evolution.

— [Dobzhansky1973]

On a high level, evolution works as follows:

```mermaid
graph LR
  P[Parents &\noffspring] --Limited resources\nensure only the\nfittest survive--> Survivors
  Survivors --Survivors form\nthe new population--> Population
  Population --Parents produce\noffspring with\nsmall changes\nin traits--> Offspring
  Offspring --Offspring expand\nthe population--> P
```

Note how this process resembles the scientific method:

- The population is nature’s current theory of how best to adapt to its environment.

- Offspring inherit traits from their parents, but with small changes via mutation and, for species with sexual reproduction, crossover. In other words, each new generation contains several hypotheses on how to better adapt to the environment.

- Since resources are usually limited, only the fittest survive. Nature implicitly conducts experiments to falsify its hypotheses.

- The fittest organisms make up the new population, or current theory of how best to adapt to the environment.
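To make the loop concrete, here’s a minimal sketch of an evolutionary algorithm, a computational analogue of the process above rather than a model of biology. It assumes a toy environment where fitness is simply the number of 1 bits in a fixed-length genome; all names and parameters (`GENOME_LENGTH`, the mutation rate, the population size) are illustrative choices. Parents and offspring compete for a fixed number of places, which mirrors the “Parents & offspring” node in the diagram.

```python
import random

GENOME_LENGTH = 20  # toy environment (assumption): fitness = number of 1 bits


def fitness(genome: list[int]) -> int:
    """How well an organism is adapted to the toy environment."""
    return sum(genome)


def crossover(mother: list[int], father: list[int], rng: random.Random) -> list[int]:
    """Single-point crossover: the child combines traits of both parents."""
    point = rng.randrange(1, len(mother))
    return mother[:point] + father[point:]


def mutate(genome: list[int], rate: float, rng: random.Random) -> list[int]:
    """Flip each bit with a small probability: small changes in traits."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]


def evolve(generations: int, population_size: int = 30, seed: int = 42) -> list[int]:
    """Run the loop and return the best fitness of each generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        # Parents produce offspring with small changes in traits (hypotheses).
        offspring = [
            mutate(crossover(*rng.sample(population, 2), rng), 0.02, rng)
            for _ in range(population_size)
        ]
        # Limited resources: only the fittest of parents and offspring survive.
        candidates = sorted(population + offspring, key=fitness, reverse=True)
        # Survivors form the new population (the current theory).
        population = candidates[:population_size]
        best_per_generation.append(fitness(population[0]))
    return best_per_generation


print(evolve(generations=50)[-1])
```

Because the previous population always competes for survival, the best fitness never decreases from one generation to the next; the experiments can only replace the current theory with one that fits the environment at least as well.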

The scientific theory of evolution rests on two pillars:

- Natural selection is the differential survival and reproduction of individuals due to differences in traits [Darwin1859] [Wallace1869].

- Heredity of traits is the passing on of traits from parents to their offspring with some changes [Mendel1866], [Fisher1930].

The two pillars of evolution were first brought together in the seminal book Evolution: The Modern Synthesis [Huxley1948].

The current book similarly aspires to bring together the various pillars of software engineering.

Book organization

The rest of this book attempts to answer the question of how to make software development a real engineering discipline.

We’ll first look into each of the SWEBOK knowledge areas to assemble the pieces. Then we’ll see if we can synthesize those into an actual engineering process.

Let’s get started.

Requirements

The SWEBOK gives [Wiegers2013] and [Sommerville2015] (chapters 3-5, 10, and 12) as the main reference material for the Software Requirements knowledge area. We first summarize that literature and then present a preliminary analysis.

ISO/IEC/IEEE 29148:2011(E) also covers requirements engineering [ISO29148].

Digest of requirements literature

In this section we review the generally accepted knowledge around requirements for software systems.

Definitions

Requirements are a specification of what should be implemented. They are descriptions of how the system should behave, or of a system property or attribute. They may be a constraint on the development of the system.

— [Sommerville1997]

[Wiegers2013] recognizes different kinds of requirements:

- Business requirements describe why the organization is developing a system: the benefits it hopes to achieve, in the form of a project vision, high-level business objectives, and success metrics.

- System requirements describe the requirements for a system that consists of multiple subsystems. An external interface requirement describes the connection between the system and other systems, hardware, or users.

- User requirements describe goals or tasks the users must be able to perform with the product to realize the business requirements.

- Functional requirements specify the behaviors the system must exhibit under specific conditions. They describe what the developers must implement to enable the user requirements.

- Quality attribute requirements or quality attributes describe the product’s characteristics in various dimensions that are important to stakeholders. Quality attribute requirements often apply to the system as a whole rather than individual features or services. They constrain the system, for instance by stating how fast it must respond.

These terms aren’t used consistently. For instance, [Sommerville2015] uses the term system requirement where [Wiegers2013] uses functional requirement. We’ll stick with the above definitions.

A stakeholder is a person, group, or organization that is actively involved in a project, is affected by its process or outcome, or can influence its process or outcome. Stakeholders can be internal or external to the project team and to the developing organization.

— [Wiegers2013]

Business rules, or domain requirements, are policies, guidelines, standards, or regulations that define or constrain some aspect of the business. They aren’t requirements themselves, but lead to requirements because they dictate properties that the system must have to conform to the rules.

A business process describes a series of activities that transform inputs to outputs to achieve a specific result. Business rules influence business processes by establishing vocabulary, imposing restrictions, and governing how to compute something.

A business rule falls into one of several categories:

- Facts are statements that are true about the business at a specified time. They describe associations between important business terms. Don’t go overboard with collecting facts; focus on the ones relevant to the scope of the product.

- A constraint restricts the actions that the system or (some of) its users may perform. It can be positive (something must happen or something must be true for something else to happen) or negative (something must not happen). Many constraints are about authorization, which you can capture using a roles and permissions matrix.

- An action enabler is a rule that triggers some activity if specific conditions are true. These conditions may be complex combinations of simpler conditions. A decision table captures action enablers in a concise way using if-then statements.

- An inference creates new facts from other facts. If-then statements can capture inferences, where the “then” part specifies new knowledge rather than an action to take.

- Computations transform existing data into new data using specific mathematical formulas or algorithms. Many such rules come from outside the organization, like tax withholding formulas. Capture computations in mathematical form or in decision tables.
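To illustrate how a decision table captures an action enabler, here’s a minimal sketch for a hypothetical rule: “If an order exceeds the customer’s credit limit and the customer isn’t a preferred customer, hold the order for review.” The rule, the condition names, and the actions are invented for illustration.

```python
from itertools import product
from typing import NamedTuple


class Conditions(NamedTuple):
    over_credit_limit: bool
    preferred_customer: bool


# Each row maps one combination of condition values to the action to take.
# A complete table covers every combination (here 2 x 2 = 4 rows).
DECISION_TABLE: dict[Conditions, str] = {
    Conditions(over_credit_limit=False, preferred_customer=False): "ship",
    Conditions(over_credit_limit=False, preferred_customer=True): "ship",
    Conditions(over_credit_limit=True, preferred_customer=False): "hold for review",
    Conditions(over_credit_limit=True, preferred_customer=True): "ship and notify account manager",
}


def decide(over_credit_limit: bool, preferred_customer: bool) -> str:
    """Look up the action for the given combination of conditions."""
    return DECISION_TABLE[Conditions(over_credit_limit, preferred_customer)]


# Completeness check: every combination of condition values has an action.
assert all(Conditions(*values) in DECISION_TABLE
           for values in product([False, True], repeat=2))

print(decide(over_credit_limit=True, preferred_customer=False))  # hold for review
```

The tabular form makes a missing combination easy to spot, which is one of the main reasons to prefer it over a list of loosely worded rules.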

Requirements engineering

Requirements engineering is the discipline that deals with requirements:

```mermaid
flowchart TB
  RE[Requirements\nengineering]
  RD[Requirements\ndevelopment]
  RM[Requirements\nmanagement]
  RE --> RD
  RE --> RM
  RD --> Elicitation
  RD --> Analysis
  RD --> Specification
  RD --> Validation
```

Requirements engineering is the realm of the business analyst (BA). This can be a job title or a role performed by people that also perform other roles. The analyst serves as the principal interpreter through which requirements flow between the customer community and the software development team [Wiegers2013].

For software used outside the organization that developed it, a product manager plays the role of business analyst. Both roles can also coexist, with the product manager focusing on the external market and user demands, and the BA converting those into functional requirements.

In Agile software development, the product owner plays the role of business analyst, although sometimes both roles are present.

Business analysts have their own body of knowledge [BABOK2015], including an extension for Agile software development [AgileBABOK2017].

Requirements development aims to collect good enough requirements to allow the team to start design and construction at an acceptable level of risk [Wiegers2013]. Requirements development is an iterative process of progressive refinement of detail.

Requirements engineering is a process and, like any process, we can improve it over time. Process improvement should be a continuous and evolutionary activity. Change is only accepted when people have an incentive to change. The biggest incentive is pain, so start by making problems visible by collecting metrics. These metrics serve as the baseline to compare improvements to. The Goal-Question-Metric (GQM) approach tells you which metrics to collect [Basili1982].
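The core idea of GQM is that you work top-down: you refine a goal into questions, and each question into the metrics that answer it. Here’s a minimal sketch of that structure; the goal, questions, and metric names are hypothetical examples for a requirements process, not taken from [Basili1982].

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    text: str
    metrics: list[str]  # what to measure to answer this question


@dataclass
class Goal:
    purpose: str
    questions: list[Question] = field(default_factory=list)

    def metrics(self) -> list[str]:
        """Collect the distinct metrics needed, in first-seen order."""
        seen: list[str] = []
        for question in self.questions:
            for metric in question.metrics:
                if metric not in seen:
                    seen.append(metric)
        return seen


goal = Goal(
    purpose="Reduce rework caused by requirement defects",
    questions=[
        Question("How many requirement defects escape to testing?",
                 ["defects found in testing traced to requirements"]),
        Question("How much effort goes into rework?",
                 ["rework hours per release",
                  "defects found in testing traced to requirements"]),
        Question("How volatile are the requirements?",
                 ["requirement changes per month"]),
    ],
)

print(goal.metrics())
```

Deriving metrics from questions keeps you from collecting data nobody needs, and the same metric can serve several questions.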

Perform root-cause analysis to determine improvement hypotheses. Pick one at a time and set clear goals for it. Treat improvement efforts as a mini-project, including proper planning, staffing, and change management. Make sure to revisit the goal to check whether the experiment worked using the metrics defined earlier. Keep in mind that change usually leads to an initial productivity drop while people figure out the new way of working [Satir1991]. Also, most metrics are lagging indicators, so it may take a while before improvements become visible.

Use a change budget to limit the amount of change to a level that people can absorb.

Elicitation

Excellent software is the result of well-executed design based on excellent requirements. Excellent requirements result from effective collaboration between developers and customers. This requires that all parties know what they need to be successful and understand and respect what their collaborators need. The business analyst forges this collaborative partnership.

A partnership requires that partners speak the same language, so learn the language of the business. Put together a glossary of terms, including synonyms, acronyms, and abbreviations.

A data dictionary stores more detail about terms in the glossary. It’s a shared repository that defines the meaning, composition, data type, length, format, and allowed values for data elements used in the application.
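Keeping data dictionary entries in a machine-readable form lets you validate data against them. The sketch below is one possible shape, assuming hypothetical `order_id` and `order_status` elements; the fields mirror the attributes listed above (meaning, data type, length, format, allowed values), with the format expressed as a regular expression. Composition of compound elements is left out for brevity.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DataElement:
    name: str
    meaning: str
    data_type: type
    max_length: Optional[int] = None
    pattern: Optional[str] = None  # format, as a regular expression
    allowed_values: Optional[frozenset] = None

    def validate(self, value) -> list[str]:
        """Return a list of violations; an empty list means the value is valid."""
        if not isinstance(value, self.data_type):
            return [f"{self.name}: expected {self.data_type.__name__}"]
        problems = []
        if self.max_length is not None and len(value) > self.max_length:
            problems.append(f"{self.name}: longer than {self.max_length}")
        if self.pattern is not None and not re.fullmatch(self.pattern, value):
            problems.append(f"{self.name}: does not match format {self.pattern}")
        if self.allowed_values is not None and value not in self.allowed_values:
            problems.append(f"{self.name}: not one of the allowed values")
        return problems


DATA_DICTIONARY = {
    "order_id": DataElement(
        name="order_id",
        meaning="Unique identifier of a customer order",
        data_type=str, max_length=10, pattern=r"ORD-\d{6}"),
    "order_status": DataElement(
        name="order_status",
        meaning="Where the order is in its life cycle",
        data_type=str, allowed_values=frozenset({"new", "shipped", "cancelled"})),
}

print(DATA_DICTIONARY["order_id"].validate("ORD-123456"))  # []
print(DATA_DICTIONARY["order_status"].validate("lost"))
```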

Stakeholders

In most cases, more than one category of user, or user class, exists. Some people call user classes stakeholder profiles or personas. User classes needn’t represent humans; they can also be external systems. Document user classes and their responsibilities, characteristics, numbers, and locations.

Direct users operate the product. Indirect users receive output from the product without touching it themselves.

The analyst works with the business sponsor to select representatives of each user class, known as product champions. Product champions gather requirements for all users in their class, so make sure they have the authority and trust required to do that. Ideally, product champions are actual users of the system.

If the product targets customers outside the organization developing the software, focus groups can take the place of product champions. A focus group is a representative group of users who convene to generate input and ideas for requirements.

The project’s decision makers must resolve conflicts between user classes. The primary stakeholders, also known as favored user classes, get priority.

Disfavored user classes are groups who aren’t supposed to use the product for legal, security, or safety reasons. Functional requirements for these user classes focus on making it hard for them to use the product. Examples are authentication to keep people from using the system at all, and authorization to prevent them from using specific features. In this context, some people talk about abuse cases that the system should prevent instead of use cases that make something possible.
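A roles and permissions matrix translates directly into an authorization check. Here’s a minimal sketch that denies access by default, so unauthenticated users, unknown roles, and unknown features all get nothing; the role and feature names are hypothetical.

```python
from typing import Optional

# Rows are roles, columns are features; True means the role may use the feature.
PERMISSIONS: dict[str, dict[str, bool]] = {
    "clerk":   {"view_orders": True, "approve_refund": False, "edit_prices": False},
    "manager": {"view_orders": True, "approve_refund": True,  "edit_prices": False},
    "admin":   {"view_orders": True, "approve_refund": True,  "edit_prices": True},
}


def is_authorized(role: Optional[str], feature: str) -> bool:
    """Deny by default: no role, unknown roles, and unknown features get no access."""
    if role is None:  # the user isn't authenticated
        return False
    return PERMISSIONS.get(role, {}).get(feature, False)


print(is_authorized("manager", "approve_refund"))  # True
print(is_authorized("clerk", "approve_refund"))    # False
```

Denying by default means a missing cell in the matrix can’t accidentally grant a disfavored user access.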

Lack of adequate stakeholder involvement leads to an expectation gap, a gulf between what customers need and what developers deliver. To keep this gap to a minimum, arrange frequent contact points with product champions. Don’t limit this interaction to requirements, but involve users in as many activities as sensible.

Techniques

Elicitation is the process of identifying the needs and constraints of the various stakeholders. It focuses on how they do their work and how the system helps support that work. For any given project, you’ll probably need to use more than one of the following elicitation techniques:

- Hold interviews with individual stakeholders. Come prepared with questions and use active listening [Rogers1951]. When replacing an existing system, a good question is what annoys the user the most about it. It also helps to come with a draft model or prototype that the user can critique. Assign someone not actively participating in the discussion to take notes.

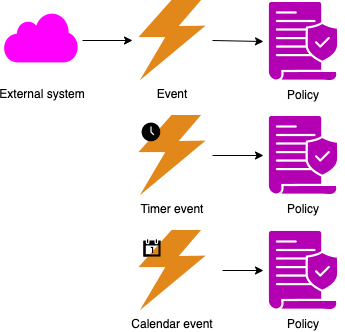

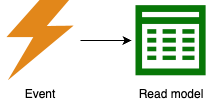

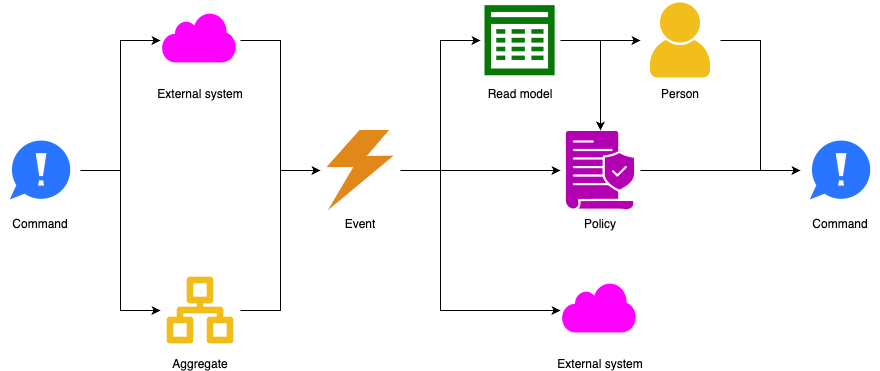

- Identify events. An event list identifies external events that trigger behavior in the system. Events originate from users, time, or external systems.

- Hold workshops with multiple stakeholders. These are especially useful for resolving disagreements, so hold them after using other techniques that surface those disagreements. Workshops may take on a life of their own, so refer to the business requirements to enforce scope and focus on the right level of abstraction for the session’s objectives. Smaller groups work faster than larger ones.

- Observe users do their work (ethnography). This helps understand the social and organizational context in which the work takes place. Limit sessions to two hours and focus on high-risk tasks. Use silent observations when you can’t interrupt users with questions.

- Distribute questionnaires. These are cheaper than alternatives when surveying large numbers of users. Their analysis can serve as input for other techniques that target smaller numbers of users.

- Analyze existing systems. Attempt to find the underlying need for offered features and assess whether the new system must address the same needs. Problem reports can give good ideas.

- Analyze existing documents. Examples are requirement specifications, business processes, user manuals, corporate and industry standards, and comparative reviews. Remember that documents may be out of date or incorrect.

- Analyze interfaces with external systems. This analysis gives technical requirements around data formats and data validation rules.

- Reuse requirements based on pre-existing business rules.

Different techniques work better for different user classes.

Elicitation is usually either usage-centric or product-centric. The usage-centric approach emphasizes understanding and exploring user goals to derive functionality. The product-centric approach focuses on defining features expected to lead to marketplace or business success.

A feature consists of one or more logically related system capabilities that provide value to a user and are described by a set of functional requirements.

— [Wiegers2013]

In usage-centric requirements elicitation, we capture user requirements in use cases. A use case describes a sequence of interactions between a system and an actor that results in value for the actor. An actor is a person or external system that interacts with the system.

A use case consists of one or more scenarios. The main success scenario describes the happy path of the interaction. Secondary scenarios, or alternative flows, describe variations in interaction, including those for error conditions. Each scenario has a description, trigger, preconditions, interaction steps, and postconditions. Exceptions describe anticipated error conditions and how the system should handle them.
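The elements of a use case map naturally onto a data structure, which can serve as a template. The sketch below is one possible shape, not a standard notation; the ATM use case is a hypothetical example.

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    description: str
    trigger: str
    preconditions: list[str]
    steps: list[str]
    postconditions: list[str]


@dataclass
class UseCase:
    name: str
    actor: str
    main_success: Scenario
    alternative_flows: list[Scenario] = field(default_factory=list)
    exceptions: dict[str, str] = field(default_factory=dict)  # error condition -> handling

    def scenarios(self) -> list[Scenario]:
        """The main success scenario followed by any alternative flows."""
        return [self.main_success, *self.alternative_flows]


withdraw_cash = UseCase(
    name="Withdraw cash",
    actor="Account holder",
    main_success=Scenario(
        description="Account holder withdraws cash from an ATM",
        trigger="Account holder inserts a bank card",
        preconditions=["ATM contains cash", "Card is not blocked"],
        steps=["Enter PIN", "Select amount", "Take card", "Take cash"],
        postconditions=["Account balance reduced by amount", "Transaction logged"],
    ),
    exceptions={"Wrong PIN entered three times": "Retain card and notify bank"},
)
```

Note the “Transaction logged” postcondition: it matters to developers, testers, and auditors rather than to the account holder.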

Users may not be aware of all preconditions, so look to other sources as well. Business rules may drive some preconditions, like what role the user must have to perform the scenario. They may also define valid input values for or computations performed during the interaction steps.

Users know about those postconditions that relate to the value created for them, but those are usually not the only ones. Developers and testers often need postconditions that aren’t as visible to the user.

Activity or state diagrams can depict the interaction steps in a use case scenario.

The frequency of use gives a first estimate of concurrent usage and capacity requirements.
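For example, you can turn frequency of use into a rough concurrency estimate with Little’s law: the average number of items in a system equals the arrival rate times the average time each item spends in it. This is one common approach rather than something the requirements literature above prescribes, and the figures below are made up.

```python
def concurrent_users(uses_per_hour: float, minutes_per_use: float) -> float:
    """Little's law, L = lambda * W, with both factors in the same time unit."""
    arrivals_per_minute = uses_per_hour / 60
    return arrivals_per_minute * minutes_per_use


# Hypothetical peak: 1,200 order entries per hour, each taking 3 minutes.
print(concurrent_users(uses_per_hour=1200, minutes_per_use=3))  # 60.0
```

The result is an average for the given rate, so base it on peak-hour usage and leave headroom for bursts.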

For products where the complexity lies outside user interactions, you may need other techniques besides use cases, like event analysis.

Stakeholders must establish acceptance criteria, predefined conditions that the product must meet to be acceptable. Without acceptance criteria, there is no way of knowing whether the product meets the requirement. Boundary values are especially interesting.
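Acceptance criteria are most useful when they’re executable. As a hypothetical example, suppose stakeholders agree that orders of 1 to 100 items (inclusive) are accepted and all other quantities are rejected. Boundary value analysis tests just below, on, and just above each boundary. The function `accept_order_quantity` is a stand-in for the real system.

```python
MIN_QUANTITY, MAX_QUANTITY = 1, 100  # hypothetical acceptance criterion


def accept_order_quantity(quantity: int) -> bool:
    """Stand-in for the system under test."""
    return MIN_QUANTITY <= quantity <= MAX_QUANTITY


# Each boundary is tested just below, on, and just above.
BOUNDARY_CASES = {
    MIN_QUANTITY - 1: False,
    MIN_QUANTITY: True,
    MIN_QUANTITY + 1: True,
    MAX_QUANTITY - 1: True,
    MAX_QUANTITY: True,
    MAX_QUANTITY + 1: False,
}

for quantity, expected in BOUNDARY_CASES.items():
    assert accept_order_quantity(quantity) == expected, quantity
print("all boundary cases pass")
```

Off-by-one mistakes, like using `<` where the requirement means “up to and including”, show up exactly at these values.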

Use cases capture user requirements. They focus on the externally visible behavior of the system. To complete development, we need more information. The extra information takes the form of functional requirements that support the user requirements.

One example is about reporting. A use case may show that the system compiles a report for a user class, but not the details of the report. A report specification describes the purpose and contents of a report. A dashboard uses multiple textual and/or graphical representations of data that provide a consolidated view of a process. Dashboards and reports may show predictive as well as descriptive analytics, which require understanding the underlying models and statistical calculations.

Quality attribute requirements

Quality attributes define how well the system works. Examples are how easy it is to use, how fast it executes, and how often it fails. External quality attributes are important to users, while internal quality attributes are important to developers, operators, and support staff.

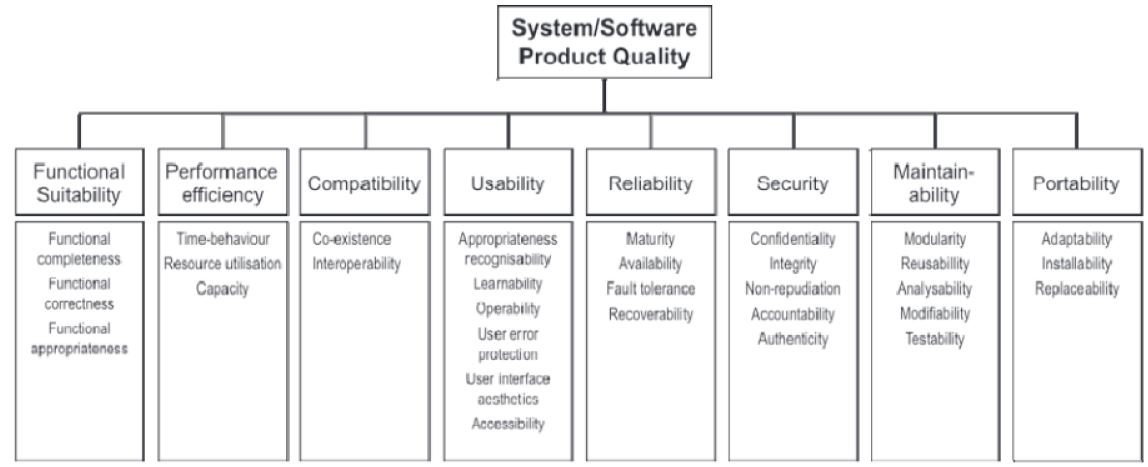

[ISO25010] defines eight quality characteristics, each of which consists of several quality attributes. Note that the first characteristic is functional suitability, which refers to functional requirements. ISO recommends you select a subset of quality attributes that are important for your system. For instance, hard real-time systems have stringent performance and efficiency requirements. Safety-critical systems place more emphasis on reliability.

Eliciting requirements for quality attributes is difficult. When given a choice, stakeholders always opt for the fastest, most reliable, most secure, etc. Ask them instead what defines unacceptable performance, reliability, security, etc.

The term dependability covers the related quality attributes of availability, reliability, safety, security, and resilience. Availability is the probability that the system is up and running and able to deliver useful services to users at any given time. Reliability is the probability that, over a given period of time, the system delivers correct services as expected by users. Safety is a judgement of the likelihood that the system doesn’t cause damage to people or the environment. Security is a judgement of the likelihood that the system can resist accidental or deliberate intrusions. Resilience is a judgement of how well the system can continue offering its critical services in the presence of disruptive events.
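Availability is often quantified from the mean time to failure (MTTF) and the mean time to repair (MTTR) as MTTF / (MTTF + MTTR), a common steady-state formula that isn’t part of the definitions above. The sketch below also converts an availability target into allowed downtime per year, which stakeholders often find easier to judge as acceptable or not; the figures are illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # ignores leap years


def availability(mttf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: the fraction of time the system is up."""
    return mttf_hours / (mttf_hours + mttr_hours)


def downtime_hours_per_year(availability_target: float) -> float:
    """Hours per year the system may be down while still meeting the target."""
    return (1 - availability_target) * HOURS_PER_YEAR


print(availability(mttf_hours=999, mttr_hours=1))  # 0.999
print(round(downtime_hours_per_year(0.999), 2))    # 8.76
```

Each extra “nine” cuts the allowed downtime by a factor of ten, which is why it pays to ask stakeholders what level of unavailability is unacceptable rather than how available the system should be.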

Quality attributes are emergent properties of the sociotechnical system, which contains hardware, software, and non-technical elements such as people, processes, and regulations. Sociotechnical systems are so complex that you can’t understand them as a whole. Rather, you have to view them as layers: equipment, operating system, networks, applications, business processes, organization, and society.

The society layer contains governments, which mandate that organizations follow certain standards that ensure products are safe and secure. Governments establish regulatory bodies with wide powers that enforce compliance with these rules.

A constraint places restrictions on the design or implementation choices available to developers. Constraints can come from stakeholders (like compliance officers), external systems that the product must interact with, or from other development activities, like transition and maintenance.

It’s easy to miss requirements:

- Assumed requirements are those that users expect without explicitly expressing them. Quality attribute requirements are often assumed.

- Implied requirements are those that are necessary because of another requirement.

- Different user classes have different requirements, so make sure to involve representatives of all user classes. For instance, the sponsor may not use the product directly, but may need KPIs that the product must collect measurements for.

- High-level requirements are often too vague. Decomposing them into more detail may bring to light other requirements, including implied ones.

- Error conditions are another source of missed requirements.

- A checklist of common functional areas may help to increase coverage.

Requirements may change as customers learn more and as the business evolves. See change management.

Try to keep design out of the requirements as much as possible. For instance, focus on user tasks rather than user interfaces. You can only go so far with that, however. For instance, sometimes you need to design (part of) the architecture to enable analysis of requirements. When dealing with systems of systems, you need to know whether the requirement is for the software or for a non-software component. You also need to know what the interface requirements are.

Reject the solutions that stakeholders often offer. Instead, describe the underlying needs that those solutions address. In other words, understand the job the customer is hiring the software to do [Christensen2016]. The Five Whys technique may help to go from a proposed solution to the underlying need [Ohno1988].

Reuse

It’s possible to reuse requirements, just like other software development artifacts. Reuse improves quality and increases productivity, but comes with its own risks, like pulling in unneeded requirements via links to related requirements.

Requirements reuse ranges from individual requirement statements to sets of requirements along with associated design, code, and tests. Reused requirements often need modification, like changing their attributes. You can copy requirements from another product or from a library of reusable requirements, or you can link them to a source. The latter makes it hard to change the reused requirements.

Glossaries and data dictionaries are good sources of reusable information. Common capabilities in products, like security features, are also good candidates for reuse. Software product lines, families of related software products, share a lot of functionality and thus offer many opportunities for reuse.

If the product replaces another system, then you’re always reusing requirements, even if implicitly. However, you shouldn’t carry over all requirements without evaluation. Look for usage data that allows you to remove features that are rarely used. Check features against the business objectives, since these may have changed. Also look for new requirements, including transition requirements. Remember that existing systems set expectations for quality attributes, like usability, performance, and throughput.

Requirement patterns offer a different form of reuse. They package considerable knowledge about a particular kind of requirement in a way that makes it convenient to define such a requirement. A pattern gives guidance about applicability, an explanation about the content in the requirement, and a template for a requirement definition. It also gives examples, links to other patterns, and offers considerations for development and testing.

While reuse saves you time, making requirements reusable costs extra time. Requirements management tools can make reuse of requirements easier and help with finding reusable requirements.

Analysis

Analysis involves reaching a richer and more precise understanding of each requirement and representing sets of requirements in multiple ways. A feature tree organizes features in logical groups and hierarchies.

Model the environment. A context diagram shows how the system fits in the ecosystem [Brown2016]. An ecosystem map is similar, but also shows external systems that the product doesn’t itself interact with.

Model the system. An analysis model is a diagram that depicts requirements visually, which sometimes makes it easier to find flaws. Analysis models blur the line between requirements and design, so be explicit about your intentions with a model.

The different analysis models each have their own strengths and weaknesses, so pick something based on your situation:

-

A Data Flow Diagram (DFD) shows the processes of a system, the data stores, any external systems, and the flows of data between them. You can nest DFDs by expanding a process into its own DFD. A level-0 DFD looks a lot like a context diagram.

-

A data model depicts the system’s data relationships. It provides a high-level view of the data, while the data dictionary gives the details. An Entity Relationship Diagram (ERD) is a common format for a data model. In an ERD, rectangles represent entities, the physical items, people, or aggregation of data. Entities are also known as records or data structures. Entities have attributes, which the data dictionary describes. Diamond shapes in the ERD represent relationships between entities. Numbers show the cardinality of the relationships.

Entities show up in data stores in a DFD. Their attributes appear in report specifications. A CRUD matrix correlates use cases with Create, Read, Update, and Delete actions on entities.

-

A swimlane diagram shows the steps of a business process or the operations of a software system. They consist of several lanes that represent different systems or actors executing steps. Swimlane diagrams can show what happens inside a process of a DFD. Standard notations are Business Process Model and Notation [BPMN2013] and Activity Diagrams [UML].

-

A State Transition Diagram (STD) shows state changes. We already saw this kind of model in the introduction. UML has a similar diagram known as a state machine diagram [UML]. Transition tables show the same information as STDs in matrix form. These models are especially relevant for real-time systems.

-

A dialog map shows navigation between screens of the system. It’s basically a user interface modeled as an STD. Dialog maps should show detailed screen layouts, but focus on the essence of the interactions.

-

A decision table lists the various values for all factors that influence the behavior of a system, along with the expected response. A decision tree shows the same information graphically.

-

An event-response table (aka event table, or event list) list all events that may occur in the system, along with the expected response. An event is a change or activity in the environment that requires a response from the system. A business event comes from a human user, a temporal event from the passing of time, and a signal event from hardware or an external system. The expected response depends on the system’s state. Event-response analysis is especially valuable for real-time systems.

-

Formal methods are mathematical approaches to software development where you define a formal model of the software. You may formally analyze this model to search for errors and inconsistencies, prove that a program is consistent with the model, or apply a series of correctness-preserving transformations to the model to generate a program. Large-scale automated theorem-proving software supports program proving. However, developing the proof obligations for theorem provers is a difficult and specialized task, so formal verification isn’t widely used.

The starting point for all formal methods is a mathematical model, which acts as the system specification. To create this model, translate the requirements (expressed in natural language, diagrams, and tables) into a mathematical language that has formally defined semantics. Constructing a formal specification forces a detailed analysis of the requirements and is an effective way of discovering requirements problems.

Different systems have differing needs for formality. Safety-critical, security-critical, and mission-critical elements of the system are good candidates for modeling using formal methods.

Prototypes

Prototypes are partial or preliminary implementations that make concepts and possibilities more tangible. Their main goal is to reduce risk, so only build them to address high-risk or high-impact issues. A prototype is an experiment to validate the hypothesis that requirements are sufficiently defined and that user interaction and architectural issues are sufficiently addressed. Seen through that lens, it makes sense to build several prototypes.

Prototypes focus either on user experience (mock-up) or technical soundness of a proposed approach (proof of concept).

Mock-ups, also known as horizontal prototypes, imply behavior without implementing it. They can be self-contained screens or a structure the user can navigate (dialog map). They often address the look and feel of the user interface. Mock-ups help stakeholders state requirements, because it’s easier to critique than to conceive, especially when it comes to completeness and errors.

A proof of concept, or vertical prototype, implements a slice of functionality from the user interface through all the technical layers. Use it to test a proposed technical approach, or to optimize algorithms. Where a mock-up focuses on usability, a proof of concept focuses on more technical quality attributes.

You can build mock-ups and proofs of concept with different degrees of fidelity.

Paper and electronic sketches or diagrams are low-fidelity prototypes used to explore functionality and flow. High-fidelity prototypes allow definition of a precise look and feel. Low-fidelity prototypes are faster to develop and thus allow for faster iteration on ideas. High-fidelity prototypes risk endless discussions about details, so remind everyone that the goal is to get the requirements right, not to design the solution yet.

A throwaway prototype lives only as long as required to reduce risk. You build it as fast as possible, without regard for sound engineering practices. Stakeholders may pressure the team to grow a throwaway prototype into the final product, but this is seldom a good idea, because it would be expensive to bring it up to quality standards. Low-fidelity prototypes are less susceptible to this pressure than high-fidelity ones. Address this potential pressure up front by setting specific expectations about the purpose of the prototype, or what experiment you’re running. If you build a high-fidelity throwaway prototype, add in time delays to prevent stakeholders from getting the wrong impression about performance.

An evolutionary prototype, in contrast, is an increment on the path towards a final product. It must therefore meet all applicable quality standards. When planned well, the first couple of increments can reduce risk just like throwaway prototypes do, although they take a bit more time to develop.

You’ll learn more from observing users work with a prototype than from asking them about it. Don’t forget to include all relevant stakeholders when evaluating a prototype.

Prioritization

Customers set requirement priorities based on each requirement’s contribution to the business objectives. Priorities are especially important for quality attribute requirements, since conflicts between quality attributes are a fact of life. You need to define which quality attributes are most important for the system, so that developers can make proper trade-offs. Priorities may be different for different parts of the system.

Make sure to cover all stakeholders when setting priorities. It’s easy to forget about support staff, for example. Achieving consensus among all stakeholders may be challenging. Define a set of criteria upfront for judging whether one requirement has higher priority than another. Examples of such criteria are business value, technical risk, cost, time to market, and contractual commitments. In case of conflicts, favored user classes get preference.

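One tool for resolving conflicts is a matrix that compares each requirement against every other requirement, where each cell shows which of the two is more important. However, this approach becomes unwieldy for larger requirement sets, since the number of comparisons grows quadratically with the number of requirements.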
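You can sketch the pairwise matrix by counting how many comparisons each requirement wins. The `more_important` callback is a hypothetical stand-in for a stakeholder’s judgment. The returned comparison count shows why the approach doesn’t scale: n requirements need n(n-1)/2 comparisons, so 100 requirements already need 4,950.

```python
from itertools import combinations


def rank_pairwise(requirements, more_important):
    """Rank requirements by how many pairwise comparisons each one wins.

    `more_important(a, b)` returns whichever of the two requirements a
    stakeholder considers more important. Returns the ranking and the
    number of comparisons that were needed.
    """
    wins = {req: 0 for req in requirements}
    comparisons = 0
    # One comparison per cell above the diagonal of the matrix.
    for a, b in combinations(requirements, 2):
        wins[more_important(a, b)] += 1
        comparisons += 1
    ranking = sorted(requirements, key=lambda req: wins[req], reverse=True)
    return ranking, comparisons
```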

A better tool is to divide all requirements into high, medium, and low priority. Assess each requirement on the dimensions of importance and urgency. Then important and urgent maps to high, important but not urgent to medium, and neither important nor urgent to low. Don’t implement requirements that are urgent but not important at all or, if you must, assign them low priority.
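The mapping from importance and urgency to a priority fits in a small function. In this sketch, returning `None` for urgent but unimportant requirements is an illustrative choice that signals "don’t do this"; a team that must do them could return "low" instead.

```python
from typing import Optional


def priority(important: bool, urgent: bool) -> Optional[str]:
    """Map an importance/urgency assessment to high, medium, or low.

    Returns None for urgent but unimportant requirements, which shouldn't
    be implemented at all (or, if you must, get low priority).
    """
    if important and urgent:
        return "high"
    if important:
        return "medium"
    if not urgent:
        return "low"
    return None  # urgent but not important
```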

Most stakeholders assign high priority to 85% of all requirements. To prevent that, run the important/urgent method again on just the high-priority requirements, using the labels highest/higher/high instead of high/medium/low. Then map high and higher to medium in the original set of requirements and keep only highest as high.
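The second pass only touches requirements that came out of the first pass as high. Here is a minimal sketch, assuming priorities are kept in a dictionary keyed by requirement ID. The function name and data shapes are illustrative.

```python
def demote(priorities, second_pass):
    """Apply the second prioritization pass to curb priority inflation.

    `priorities` maps requirement IDs to "high", "medium", or "low".
    `second_pass` maps each originally high requirement to "highest",
    "higher", or "high". Only "highest" stays high; the rest become medium.
    """
    result = dict(priorities)
    for req, label in second_pass.items():
        if priorities[req] != "high":
            raise ValueError(f"{req} wasn't high priority in the first pass")
        result[req] = "high" if label == "highest" else "medium"
    return result
```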

Quality Function Deployment (QFD) is a more rigorous technique. It’s based on the benefit provided by a feature, the penalty paid if that feature is absent, its cost, and implementation risk. Construct a matrix with these factors as columns and the requirements as rows. Each cell gets a value between 1 and 9, inclusive, and each factor gets a weight. Calculate the total value as the weighted average of benefit and penalty. Calculate the percentage of total cost and total risk as well. Then priority = value / (cost % + risk %).
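The calculation is easy to get wrong by hand, so a short script helps. This sketch follows the steps above. The default weights are hypothetical placeholders that you’d need to calibrate. Applying weights to the cost and risk percentages is an assumption: with both of those weights set to 1, the sketch reduces to exactly priority = value / (cost % + risk %).

```python
from dataclasses import dataclass


@dataclass
class Scores:
    """Scores between 1 and 9, inclusive, for one requirement."""
    benefit: int
    penalty: int
    cost: int
    risk: int


def qfd_priorities(scores, w_benefit=2.0, w_penalty=1.0, w_cost=1.0, w_risk=1.0):
    """Compute priority = value / (cost % + risk %) for each requirement.

    `scores` maps requirement IDs to Scores. The default weights are
    illustrative only.
    """
    for s in scores.values():
        for v in (s.benefit, s.penalty, s.cost, s.risk):
            if not 1 <= v <= 9:
                raise ValueError("scores must be between 1 and 9, inclusive")
    total_cost = sum(s.cost for s in scores.values())
    total_risk = sum(s.risk for s in scores.values())
    result = {}
    for req, s in scores.items():
        # Value is the weighted average of benefit and penalty.
        value = (w_benefit * s.benefit + w_penalty * s.penalty) / (w_benefit + w_penalty)
        cost_pct = 100 * s.cost / total_cost
        risk_pct = 100 * s.risk / total_risk
        result[req] = value / (w_cost * cost_pct + w_risk * risk_pct)
    return result
```

For example, a requirement with benefit 9, penalty 3, cost 2, and risk 1, next to one with benefit 3, penalty 3, cost 6, and risk 3, gets value 7 against 25% of the cost and 25% of the risk, for a priority of 0.14. The other requirement scores 0.02.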

Using QFD takes a lot of effort, especially since you need to calibrate the weights. You may want to spend this effort only on high-priority requirements, as obtained by other methods, or when stakeholders can’t reach consensus.

Other prioritization techniques exist, like MoSCoW, or giving participants $100 to “buy” requirements. These often have considerable drawbacks, such as being open to gaming.

The priority of a requirement should be one of its attributes in the SRS.

A high-priority requirement may depend on a lower-priority requirement. In that case, the lower-priority requirement must come first despite its lower priority. Quality attribute requirements that affect the architecture should receive high priority, because rearchitecting is expensive.
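One way to order work that respects dependencies is to let each requirement inherit the most urgent priority of anything that depends on it, and then sort by that effective priority. This is a sketch under stated assumptions: the inheritance rule and the function name are illustrative choices, not a standard algorithm from the text. It uses `graphlib.TopologicalSorter` from the Python standard library (3.9+), which raises `CycleError` if dependencies are circular.

```python
from graphlib import TopologicalSorter

RANK = {"high": 0, "medium": 1, "low": 2}  # lower number = more urgent


def implementation_order(priorities, depends_on):
    """Order requirements by priority while making dependencies come first.

    `priorities` maps every requirement ID to "high", "medium", or "low".
    `depends_on` maps a requirement ID to the IDs it depends on.
    """
    graph = {req: set(depends_on.get(req, ())) for req in priorities}
    # Dependencies appear before the requirements that need them.
    topo = list(TopologicalSorter(graph).static_order())

    # Walk backwards so each requirement's dependents are processed first,
    # then pass the most urgent rank down to its dependencies.
    effective = {req: RANK[priorities[req]] for req in priorities}
    for req in reversed(topo):
        for dep in graph[req]:
            effective[dep] = min(effective[dep], effective[req])

    # A dependency's effective rank never exceeds its dependents', and the
    # stable sort keeps topological order among ties, so dependencies stay first.
    return sorted(topo, key=lambda req: effective[req])
```

With a high-priority `Export` that depends on a low-priority `DataModel`, plus an independent medium-priority `Search`, the order becomes `DataModel`, `Export`, `Search`. The low-priority requirement jumps ahead because something important needs it.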

Software has a cost, which developers estimate. Customers should respect those estimates. Some features may be expensive or even infeasible. Sometimes changing features can make them attainable or cheaper.

Priorities and cost estimates together make it possible to deliver maximum value at the lowest cost at the right time. Priorities may change over time.

Specification

Specification involves representing and storing the collected requirements knowledge in a persistent and well-organized fashion. We should record requirements in a shareable form, rather than relying on oral tradition. They should also be under version control. Use templates for consistency.

Keep business rules separate from requirements, since their scope is wider. This allows reuse across products. Document the origin and expected frequency of change for each business rule. The business should own business rules, rather than the software development organization.

Specification documents

The Vision & Scope document contains the business requirements, scope, and business context. Other names for this document are project charter, business case document, or Market Requirements Document (MRD). Whatever the name, the business sponsor is the owner.

The vision provides a shared understanding of the desired outcome: what the project is about and should become. It applies to the product as a whole and should change only when the company’s business objectives do.

The scope defines what part of the vision the current project or iteration addresses. At a high level, it’s about what business objective to target. At a lower level, it’s about what features to include. Vision and scope together allow evaluating proposed requirements for project fit.

The vision and scope document also establishes priorities. Categorize the five dimensions (features, quality, schedule, cost, and staff) as either constraint, driver, or degree of freedom. Not all dimensions can be constraints or drivers; degrees of freedom allow responding to changes in requirements or project realities. For instance, many Agile methods treat schedule & quality as constraints, staff & cost as drivers, and features (scope) as a degree of freedom.

Collect functional and quality attribute requirements in a Software Requirements Specification (SRS). This practice enables downstream activities, like validation and change management. The SRS goes by different names in different contexts, such as business requirements document or functional specification.

The SRS refers to the Vision & Scope document. It also describes the user classes and any design and construction constraints, like which programming language to use or which standards to follow. Documenting assumptions may prevent serious issues. An assumption is a statement that the team believes to be true in the absence of proof.

Writing requirements down may be tedious, but the cost of doing so is small compared to the cost of acquiring that knowledge, or of new hires relearning it in the future.

Learn just enough about requirements for prioritization, then flesh out more details when needed for design and construction. Label uncertain requirements as TBD and assign someone to resolve the issue.

Trace each requirement back to its origin: a business requirement, system requirement, or business rule. Record the stakeholders who requested each requirement.
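Several attributes mentioned in this section naturally belong to each requirement: its priority, its origin, the stakeholders who requested it, who resolves it while it’s TBD, and why it was rejected, if it was. The record below is a hypothetical shape for such an entry. The field names are illustrative and not a prescribed SRS template.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Origin(Enum):
    BUSINESS_REQUIREMENT = "business requirement"
    SYSTEM_REQUIREMENT = "system requirement"
    BUSINESS_RULE = "business rule"


@dataclass
class Requirement:
    """One SRS entry; field names are illustrative."""
    req_id: str
    text: str
    priority: str                      # "high", "medium", or "low"
    origin: Origin                     # the kind of thing it traces back to
    origin_ref: str                    # ID of that business requirement, system requirement, or rule
    requested_by: List[str] = field(default_factory=list)  # requesting stakeholders
    tbd_owner: Optional[str] = None    # who resolves it while it's TBD
    rejection_reason: Optional[str] = None  # kept to prevent recurring discussions


def trace(requirements, origin_ref):
    """List the IDs of all requirements that trace back to the given origin."""
    return [r.req_id for r in requirements if r.origin_ref == origin_ref]
```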

Assign a unique ID to each requirement. The best format for such IDs is a text-based hierarchical tagging scheme, which gives rise to IDs like Product.Discount.Error.
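Hierarchical tags are easy to validate and query by prefix. The sketch below assumes a hypothetical convention where each dot-separated segment starts with a capital letter. Adjust the pattern to whatever convention your team adopts.

```python
import re

# Hypothetical convention: dot-separated segments, each starting with a capital letter.
ID_PATTERN = re.compile(r"[A-Z][A-Za-z0-9]*(?:\.[A-Z][A-Za-z0-9]*)*")


def check_ids(ids):
    """Raise ValueError if any requirement ID is malformed or not unique."""
    seen = set()
    for req_id in ids:
        if not ID_PATTERN.fullmatch(req_id):
            raise ValueError(f"malformed requirement ID: {req_id!r}")
        if req_id in seen:
            raise ValueError(f"duplicate requirement ID: {req_id!r}")
        seen.add(req_id)


def children(ids, parent):
    """List the IDs directly below `parent` in the hierarchy."""
    prefix = parent + "."
    return [i for i in ids if i.startswith(prefix) and "." not in i[len(prefix):]]
```

For example, `children(["Product", "Product.Discount", "Product.Discount.Error"], "Product")` returns only `["Product.Discount"]`.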

Present requirements to stakeholders in different ways to reveal more insights: for instance, text versus diagrams, or use cases versus acceptance tests.

It’s often useful to group requirements by features (or even a feature tree) or by user class.

You may want to include a logical data model in the SRS, including how to collect, verify, process, protect, and destroy data. Descriptions of reports are also valuable.

Don’t forget to document quality attribute requirements, such as those for usability (including localization and internationalization), performance, and security. Also include requirements for migrating from existing systems.

To prevent recurring discussions, record rejected requirements and the reasons for their rejection.

The SRS should contain a glossary.

For a software product that’s part of a larger system, capture the system requirements in a System Requirements Specification (SyRS). Requirements in the SyRS may need to be decomposed into individual requirements for hardware, software, and humans.

Writing requirements

Excellent requirements are:

-

Correct. Requirements must accurately describe a capability that meets a stakeholder’s need. Formalize correctness using acceptance criteria.

-

Complete. Each requirement must contain all information necessary for validation and implementation. This includes what to do in case of errors.

-

Unambiguous. Natural language is prone to ambiguity, but it’s necessary, since stakeholders usually can’t read formal specifications well enough to validate requirements.

Ambiguity comes in two forms. The first is when one person can see more than one way to interpret a requirement. The harder type is when different people each see only one interpretation, but those interpretations differ from each other.

To reduce ambiguity, we often use semi-structured text, which constrains how requirements are phrased. Lists, tables, formulas, charts, and decision trees may be useful as well. Use terms consistently and as defined in the glossary. Synonyms are okay, as long as they’re in the glossary as well. Try to avoid adverbs, since they introduce subjectivity.

-

Necessary. Required functionality should provide stakeholders with business value in line with the business objectives for the product. This includes compliance with laws, regulations, and standards. Reject requirements that don’t contribute to the stated business objectives. Likewise, exclude business rules that don’t need implementing in software.

Requirements must come from a source that has the authority to provide requirements.

-

Feasible. It must be possible to implement the requirement in an economic fashion.

-

Prioritized. Again, economics come into play, this time to make sure we can work on the most important things first.

-

Verifiable. Write individually testable requirements, with a small number of related test cases each. The count of testable requirements is actually a metric for product size. Rephrase negative requirements into positives (where possible) so that they’re clearer and thus easier to verify.

Acceptance tests are programs that verify that the software meets its requirements. They map to individually testable requirements.
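Naming each acceptance test after the requirement it verifies keeps the mapping visible. The sketch below assumes a hypothetical requirement, Product.Discount.Error: "If the customer enters an unknown discount code, the system rejects the code and charges the full price." The `apply_discount` function is a made-up stand-in for the system under test. The tests use Python’s standard `unittest` module.

```python
import unittest

# Hypothetical system under test.
KNOWN_CODES = {"SPRING10": 0.10}


def apply_discount(price, code):
    """Return (amount to charge, error message or None)."""
    rate = KNOWN_CODES.get(code)
    if rate is None:
        return price, f"Unknown discount code: {code}"
    return round(price * (1 - rate), 2), None


class ProductDiscountErrorTest(unittest.TestCase):
    """Acceptance tests for requirement Product.Discount.Error."""

    def test_unknown_code_is_rejected(self):
        _, error = apply_discount(50.00, "BOGUS")
        self.assertIsNotNone(error)

    def test_unknown_code_charges_full_price(self):
        charged, _ = apply_discount(50.00, "BOGUS")
        self.assertEqual(charged, 50.00)
```

Each test checks one observable consequence of the requirement. This matches the advice to write individually testable requirements with a small number of related test cases each. A runner such as `unittest` discovers and executes the test class.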